The chatbot that once asked “Press 1 for billing” can now autonomously process your refund, update your account, and schedule a follow-up call.

What we’re witnessing is the fourth major evolution in AI-human interaction, from rigid rule-following systems to autonomous agents that can reason, adapt, and take action across complex workflows.

This progression from rule-based chatbots to conversational AI to generative AI to agentic AI represents a natural but revolutionary path that’s transforming how enterprises automate work.

Each generation builds on the last, but agentic AI fundamentally changes the game: instead of just generating responses, these systems execute multi-step tasks independently.

That shift from read-only AI (which produces text) to read-write AI (which changes systems) creates unprecedented automation opportunities and new security challenges.

Once AI systems can modify databases, trigger workflows, and execute actions autonomously, they become powerful non-human identities that must be authenticated, authorized, and governed. Without proper identity and access controls, agentic AI doesn’t just scale productivity; it scales risk.

Generation 1: Rule-Based Chatbots (1990s-2010s)

The first wave of AI-powered interaction was barely “intelligent.” These early chatbots followed rigid if-then rules, executing predetermined decision trees rather than interpreting user intent. They lived inside phone trees and basic web widgets, waiting for someone to press the right number or type the exact keyword to trigger a canned response.

This rule-based design defined their interaction model. There was no actual conversation, just keyword matching and menu-driven prompts that pushed users down a fixed path. If a customer went off-script, the system broke.

Even with these limitations, they delivered clear enterprise value. Rule-based bots could handle simple, repetitive tasks like resetting passwords, sharing store hours, or deflecting basic FAQs without human involvement. That meant fewer calls for support teams to handle and more consistent responses for customers.

But they were brittle and inflexible. Every new rule had to be programmed manually, and even small changes often required developer intervention. If a customer’s question didn’t match an existing rule, the bot failed and handed the conversation off to a human agent.
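To see why these bots were so brittle, here is a minimal Python sketch of the keyword-matching approach. The rules, replies, and the `respond` function are hypothetical illustrations, not taken from any real product.

```python
# A toy rule-based bot: hand-written keyword rules, no model of user intent.
# The keywords and canned replies below are hypothetical examples.
RULES = {
    "password": "To reset your password, visit the account settings page.",
    "hours": "Our stores are open 9am to 6pm, Monday through Saturday.",
    "billing": "Press 1 for billing.",
}

FALLBACK = "Sorry, I didn't understand. Transferring you to a human agent."


def respond(message: str) -> str:
    """Return the canned reply for the first matching keyword, else escalate."""
    text = message.lower()
    for keyword, reply in RULES.items():
        if keyword in text:
            return reply
    # Anything off-script falls through to a human handoff.
    return FALLBACK


if __name__ == "__main__":
    print(respond("How do I reset my password?"))
    print(respond("I forgot my login"))  # same intent, but no keyword matches
```

A user who types "I forgot my login" has the same intent as one who mentions a password, but because no keyword matches, the bot escalates. Every synonym had to be added as another rule by hand.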

Examples:

- Early IVR phone systems (“Press 1 for English”).

- Web chat widgets with predefined responses.

- Simple customer service bots that escalated to humans frequently.

Why enterprises adopted them:

- Reduced call center volume for common, low-complexity queries.

- 24/7 availability without extra staffing costs.

- Consistent answers to standard questions.

Generation 2: Conversational AI (2010s-2020)

The second generation marked a real leap forward. Instead of matching keywords, these systems could finally understand what people meant. Powered by machine learning models trained on large datasets, conversational AI could recognize intent, extract relevant entities, and maintain context across multiple turns in a conversation.

This shifted the interaction model from rigid decision trees to dynamic conversation flows. Users no longer had to phrase their questions perfectly or follow scripted prompts; the system could interpret natural language and respond accordingly. It was the first time AI could mimic the back-and-forth rhythm of human conversation.
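The key difference from keyword matching is that the bot keeps a dialogue state across turns. The sketch below assumes intent and entity extraction is already handled; the regex-based `extract` function is only a stand-in for a trained NLU model, and the `check_order_status` intent and `order_id` slot are hypothetical.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field


@dataclass
class DialogueState:
    """Context carried across turns: the active intent and any filled slots."""
    intent: str | None = None
    slots: dict[str, str] = field(default_factory=dict)


def extract(utterance: str) -> tuple[str | None, dict[str, str]]:
    """Stand-in for an ML intent classifier plus entity extractor."""
    text = utterance.lower()
    intent = None
    if re.search(r"\b(order|package|shipment)\b", text):
        intent = "check_order_status"
    entities = {}
    match = re.search(r"\b(\d{5,})\b", text)
    if match:
        entities["order_id"] = match.group(1)
    return intent, entities


def handle_turn(state: DialogueState, utterance: str) -> str:
    intent, entities = extract(utterance)
    if intent:
        state.intent = intent
    state.slots.update(entities)  # entities persist from earlier turns
    if state.intent == "check_order_status":
        if "order_id" not in state.slots:
            return "Sure. What's your order number?"
        return f"Looking up order {state.slots['order_id']}..."
    return "How can I help you today?"


if __name__ == "__main__":
    state = DialogueState()
    print(handle_turn(state, "Where is my package?"))
    print(handle_turn(state, "It's 482913"))
```

The second turn never mentions an order, yet the bot answers correctly because the intent from the first turn is carried forward in `DialogueState`.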

For enterprises, this meant customer interactions became more sophisticated and far less dependent on human intervention. Bots could now handle moderately complex inquiries like checking order statuses, scheduling appointments, or answering nuanced product questions without escalating to a live agent. That reduced training time for human agents and allowed support teams to focus on higher-value work.

Examples:

- Consumer voice assistants like Amazon Alexa and Google Assistant.

- Enterprise platforms like IBM Watson Assistant.

- Customer service bots capable of multi-turn conversations.

The enterprise impact:

- Significantly higher containment rates for customer service.

- Reduced agent training time as bots took on more complexity.

- Improved customer experience through natural, fluid conversations.

Still, they had clear boundaries. These systems were reactive: they waited for a prompt instead of taking initiative. They could only operate within a single domain, and they couldn’t take actions outside their specific environment. The conversation was more natural, but it still ended at the edge of their sandbox.

Generation 3: Generative AI (2020-2023)

If conversational AI made machines sound human, generative AI made them think like one, or at least seem to. This era introduced large language models trained on massive datasets, capable of synthesizing information from across domains and producing original, human-like content on demand.

The interaction model became truly open-ended. Instead of following conversation flows, these systems could answer any question, draft entire documents, write code, or explain complex concepts in plain language. They didn’t just recognize intent; they generated knowledge.

For enterprises, this changed the pace of work. Generative AI tools accelerated knowledge work, slashed content production timelines, and lowered the barrier to entry for complex tasks. Non-technical employees could now use AI to generate marketing copy, analyze reports, or summarize research that would have previously required specialist skills or dedicated teams.

Examples:

- ChatGPT reshaping how people interact with AI.

- GitHub Copilot transforming software development workflows.

- Enterprise-grade implementations for large-scale content creation and analysis.

The enterprise transformation:

- Major productivity gains: employees completed knowledge work faster.

- Democratization of advanced capabilities for non-technical users.

- Scaled content generation for documentation, marketing, and analysis.

But this generation had a critical gap: it could produce answers, not take action. Generative AI stopped at the point of suggestion; humans still had to implement its outputs. It operated entirely in the realm of text, unable to directly modify systems, trigger workflows, or execute tasks. It was powerful, but passive.

Generation 4: Agentic AI (2023-Present)

Generative AI could answer questions. Agentic AI can achieve goals.

This new era combines multi-step reasoning with tool usage and autonomous execution, allowing AI systems to operate not just as assistants but as independent actors inside enterprise environments.

The interaction model has shifted from conversation to collaboration. Instead of waiting for prompts, agentic systems pursue objectives, adapt their approach when they hit roadblocks, and persist across complex workflows until they complete the task. They’re not just talking about work anymore; they’re doing it.

The key breakthrough is their integration with external systems. These agents can call APIs, update databases, move data between platforms, and trigger actions across software environments. They maintain context over time, handle errors by adjusting strategies, and, most importantly, act proactively. They don’t just respond to user requests; they initiate work on their own.

What makes this different:

- Tool integration: Can interact directly with APIs, databases, and external services.

- Persistent execution: Maintains context and keeps working toward goals over time.

- Error handling: Adjusts approach when conditions change or initial methods fail.

- Proactive behavior: Initiates actions instead of waiting for instructions.
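Stripped to its control flow, the tool-use-plus-error-handling pattern looks roughly like the sketch below. It is a simplification under stated assumptions: in a real agent a language model chooses the next tool, whereas here a fixed `PLAN` stands in for that reasoning, and the tools (`lookup_order`, `refund_via_payments_api`, and so on) are hypothetical stubs rather than real API calls.

```python
from typing import Callable, List


class ToolError(Exception):
    """Raised by a tool when it cannot complete its action."""


# Hypothetical tools standing in for real API calls; each updates a shared context.
def lookup_order(ctx: dict) -> None:
    ctx["amount"] = 42.0


def refund_via_payments_api(ctx: dict) -> None:
    # Simulates an outage so the agent has to change strategy.
    raise ToolError("payments API timed out")


def refund_via_store_credit(ctx: dict) -> None:
    ctx["refund"] = f"store credit of {ctx['amount']:.2f}"


def schedule_follow_up(ctx: dict) -> None:
    ctx["follow_up"] = "call booked"


Step = List[Callable[[dict], None]]

# A fixed plan stands in for the model's reasoning: each step lists
# alternative strategies in order of preference.
PLAN: List[Step] = [
    [lookup_order],
    [refund_via_payments_api, refund_via_store_credit],
    [schedule_follow_up],
]


def run_agent(plan: List[Step], ctx: dict, log: List[str]) -> dict:
    """Run every step to completion, falling back when a tool fails."""
    for strategies in plan:
        for tool in strategies:
            try:
                tool(ctx)
                log.append(f"ok: {tool.__name__}")
                break  # step done, move on to the next step
            except ToolError as err:
                log.append(f"failed: {tool.__name__} ({err})")
        else:
            # No strategy succeeded: stop and hand off instead of guessing.
            raise RuntimeError("all strategies failed; escalating to a human")
    return ctx
```

When the primary refund tool fails, the agent records the failure and switches to its fallback instead of stopping; only when every strategy for a step fails does it hand the task back to a human.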

Enterprise applications emerging:

- Software development: Agents that write, test, and deploy code autonomously.

- Customer operations: Agents that research issues, implement fixes, and follow up with customers.

- Data analysis: Agents that query systems, generate insights, and build reports.

- DevOps: Agents that monitor infrastructure, diagnose issues, and remediate problems automatically.

Agentic AI closes the loop that previous generations left open. It no longer hands off instructions to humans; it carries them out, end to end.

The Enterprise Value Progression: Why Each Generation Mattered

Each generation of AI didn’t just make systems smarter; it fundamentally reshaped how work gets done.

- Rule-based chatbots delivered the first clear ROI by deflecting simple support tickets and cutting costs, but they were narrow and rigid.

- Conversational AI expanded that efficiency by containing more complex interactions, reducing agent workload, and improving customer experience, unlocking scale without proportional headcount growth.

- Generative AI shifted the value curve entirely. It stopped being about efficiency and became about multiplication, dramatically accelerating knowledge work and enabling anyone to produce high-quality output at scale.

- Agentic AI now goes further: it takes over the execution layer itself, automating entire workflows from end to end. This isn’t about speeding up human work; it’s about replacing whole chains of manual effort with autonomous systems.

Efficiency Evolution

| Generation | Efficiency Gain | Primary Value |
|---|---|---|
| Rule-based | 20-30% reduction in simple support tickets | Cost deflection |
| Conversational | 50-60% improvement in containment rates | Better customer experience |
| Generative | 3-5x faster content creation and analysis | Productivity multiplication |
| Agentic | End-to-end automation of complex workflows | Labor transformation |

The Compounding Effect

Each leap built directly on the last. Early adopters of rule-based systems had a head start when conversational platforms arrived. Those who embraced generative AI quickly turned that lead into exponential output gains.

Now, agentic AI threatens to widen the gap even further. Organizations that can hand off entire processes to autonomous systems will operate at a level of scale and speed their competitors simply can’t match. Laggards won’t just be slower; they’ll be structurally disadvantaged.

The Infrastructure Challenge: Why Identity Becomes Critical

Generations 1 through 3 were essentially read-only. They consumed information, generated responses, and handed the output back to humans to act on. Agentic AI changes that equation. It’s read-write, actively modifying systems, triggering workflows, and executing real-world actions on its own.

That shift makes identity and access control non-negotiable. Once an AI system can act, it stops being just a tool and becomes a powerful non-human actor inside the enterprise. And like any actor with system-level permissions, it must be authenticated, authorized, and governed.

Why Traditional Security Falls Short

Most current security models were built for humans, not autonomous agents. They don’t hold up when AI begins taking actions across environments at machine speed.

Static API keys can be copied, leaked, or misused, which makes them far too fragile for systems operating without human oversight. Human-centric authentication models like passwords, SSO sessions, and MFA simply don’t apply to agent workflows.

Cross-system access creates credential sprawl that’s difficult to manage or rotate securely. And policy enforcement must operate at machine speed and scale, not through manual reviews or ticket-based approvals.
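To make the contrast with static API keys concrete, here is a minimal sketch of short-lived, scoped credentials using only Python's standard library. The token format, the `issue_token`/`verify_token` names, and scope strings such as `refunds:write` are hypothetical; a real deployment would more likely use an established standard such as OAuth 2.0 client-credentials tokens or signed JWTs rather than a hand-rolled format.

```python
from __future__ import annotations

import base64
import hashlib
import hmac
import json
import time

# Hypothetical issuer key; in practice this lives in a secrets manager or HSM.
SIGNING_KEY = b"demo-issuer-key"


def _sign(body: str) -> str:
    return hmac.new(SIGNING_KEY, body.encode(), hashlib.sha256).hexdigest()


def issue_token(agent_id: str, scopes: list[str], ttl_seconds: int = 300,
                now: float | None = None) -> str:
    """Mint a short-lived credential bound to one agent and a narrow set of scopes."""
    now = time.time() if now is None else now
    claims = {"sub": agent_id, "scopes": scopes, "exp": now + ttl_seconds}
    body = base64.urlsafe_b64encode(json.dumps(claims).encode()).decode()
    return f"{body}.{_sign(body)}"


def verify_token(token: str, required_scope: str, now: float | None = None) -> dict:
    """Check signature, expiry, and scope; raise PermissionError on any failure."""
    now = time.time() if now is None else now
    body, _, sig = token.rpartition(".")
    if not hmac.compare_digest(sig, _sign(body)):
        raise PermissionError("invalid signature")
    claims = json.loads(base64.urlsafe_b64decode(body))
    if now >= claims["exp"]:
        raise PermissionError("token expired")
    if required_scope not in claims["scopes"]:
        raise PermissionError(f"scope {required_scope!r} not granted")
    return claims
```

Because each token names a single agent, carries only the scopes its task needs, and expires within minutes, a leaked copy is far less useful than a leaked static key.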

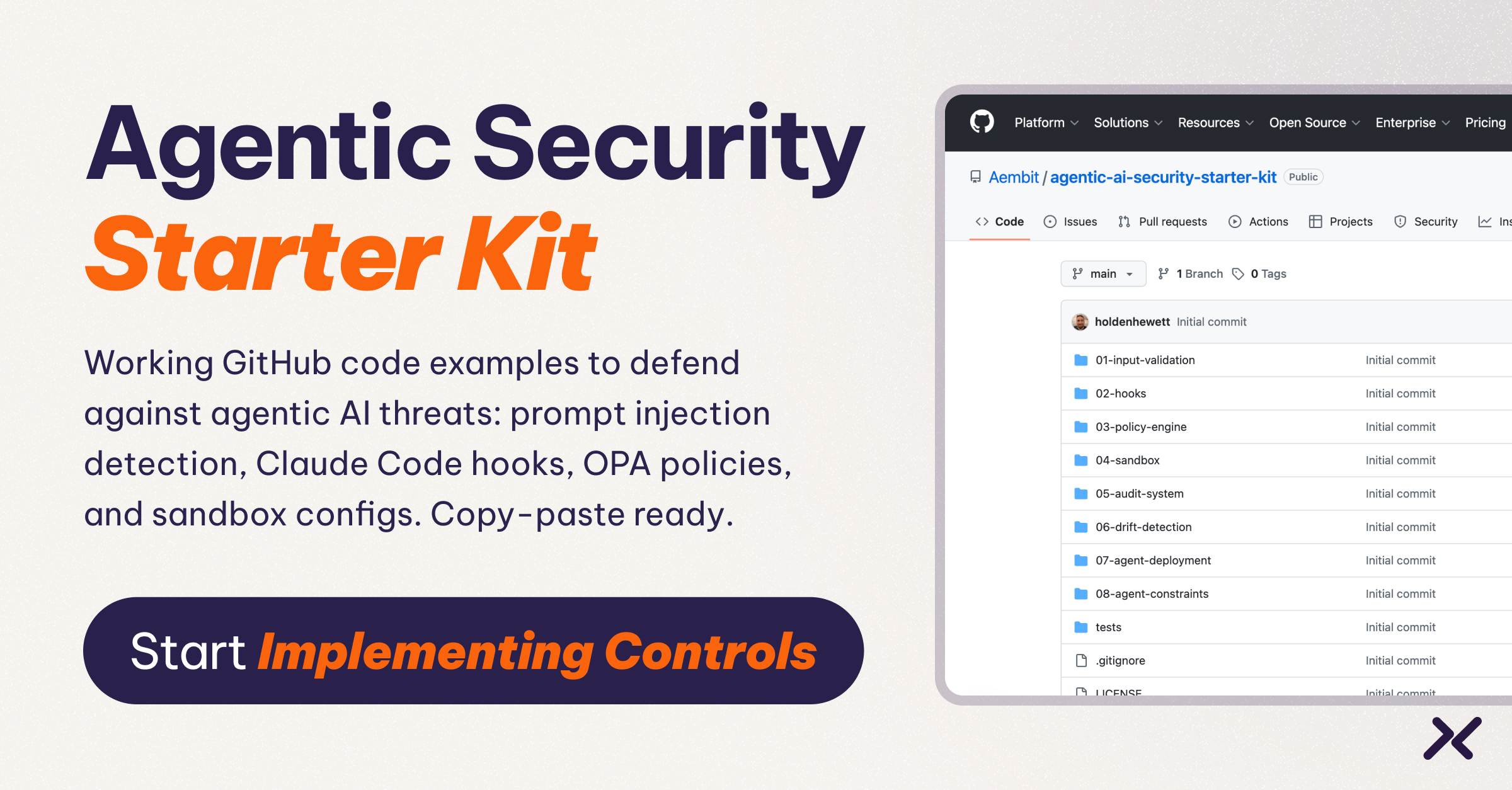

New Identity Requirements

Enterprises adopting agentic AI will need identity architectures designed specifically for non-human actors. That includes:

- Machine-to-machine authentication so agents can securely connect to multiple systems and services

- Dynamic permissions that adjust access based on the agent’s current task, context, and risk level

- Audit and compliance logging for every autonomous action, making activity attributable and reviewable

- Policy enforcement boundaries that ensure agents operate only within pre-defined, controlled scopes

Without these controls, agentic AI becomes a security blind spot, one with the power to move data, deploy code, and change infrastructure at scale.
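Dynamic permissions, policy boundaries, and audit logging can be combined in a single authorization check that runs on every agent action. The following is a minimal sketch assuming a hypothetical per-task scope table, a 0 to 10 risk score supplied by some upstream system, and an in-memory audit log; a production setup would more likely delegate these decisions to a dedicated policy engine and durable log storage.

```python
from __future__ import annotations

import json
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    agent_id: str
    task: str
    action: str
    risk: int  # hypothetical 0-10 score from an upstream risk system


# Policy boundary: the actions each task may use (hypothetical task names).
TASK_SCOPES = {
    "triage_ticket": {"tickets:read", "tickets:comment"},
    "issue_refund": {"orders:read", "refunds:write"},
}
MAX_AUTONOMOUS_RISK = 6  # above this, a human must approve

AUDIT_LOG: list[str] = []


def authorize(req: Request) -> bool:
    """Allow only in-scope, low-risk actions for the agent's current task."""
    allowed_actions = TASK_SCOPES.get(req.task, set())
    if req.action not in allowed_actions:
        decision, reason = False, "action outside task scope"
    elif req.risk > MAX_AUTONOMOUS_RISK:
        decision, reason = False, "risk above autonomous threshold"
    else:
        decision, reason = True, "within scope and risk budget"
    # Every decision, allow or deny, is recorded and attributed to one agent.
    AUDIT_LOG.append(json.dumps({
        "ts": time.time(), "agent": req.agent_id, "task": req.task,
        "action": req.action, "allowed": decision, "reason": reason,
    }))
    return decision
```

The same agent may issue a refund inside its refund task but is denied when it attempts that action from a triage task or when the risk score crosses the threshold, and every one of those decisions lands in the audit log.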

The Strategic Implications: What This Means for Enterprises

Agentic AI changes how work is structured, how teams operate, and how value is created. Organizations that understand this early will gain a decisive advantage. Those that don’t will be left competing with fundamentally slower operating models.

The window for early adoption is short. Companies deploying agentic AI effectively will execute complex workflows at scale with minimal human intervention, while competitors burn more time and headcount to achieve the same outcomes.

This transformation forces a rethink of how work is organized: human roles shift from execution to direction and oversight, workflows get redesigned for continuous automation, and tasks that once required teams can now be handled by individual agents working around the clock.

Risk Considerations

With that opportunity comes real risk. Autonomous systems don’t just scale output; they scale failure.

The security challenges go beyond traditional concerns. Agents with excessive permissions can enable lateral movement across systems, moving from a compromised service to sensitive data stores or production infrastructure.

Without proper boundaries, agents can evade policy controls by chaining together seemingly innocent API calls to achieve unauthorized outcomes. And when AI-driven errors compound (one agent’s mistake triggering cascading failures across dependent systems), the blast radius expands rapidly.
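One way to counter call chaining is to evaluate each request against the session's history rather than in isolation, and to cap how many actions an agent may take before a human reviews it. The sketch below is hypothetical: the `SessionGuard` class, the forbidden sequence, and the action budget are illustrative choices, not a standard mechanism.

```python
from __future__ import annotations


class SessionGuard:
    """Evaluates each call against the session's history, not in isolation."""

    # Hypothetical rule: reading PII and then sending data externally is
    # denied, even though each call is individually permitted.
    FORBIDDEN_SEQUENCES = [("customers:read_pii", "http:post_external")]

    def __init__(self, max_actions: int = 20) -> None:
        self.history: list[str] = []
        self.max_actions = max_actions  # simple circuit breaker on blast radius

    def check(self, action: str) -> None:
        """Raise PermissionError if the action breaks a rule; otherwise record it."""
        if len(self.history) >= self.max_actions:
            raise PermissionError("action budget exhausted; pausing agent for review")
        for earlier, later in self.FORBIDDEN_SEQUENCES:
            if action == later and earlier in self.history:
                raise PermissionError(f"{earlier} followed by {later} is not allowed")
        self.history.append(action)
```

Reading PII and posting to an external endpoint are each harmless on their own, but the guard blocks the combination. The action budget acts as a crude circuit breaker that limits how far a runaway agent can spread damage before a person looks at it.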

The identity gap makes these risks worse. Shared service accounts eliminate traceability; when multiple agents use the same credentials, you can’t attribute actions to specific workflows or determine which agent caused an incident. Traditional identity systems can’t assign dynamic, context-based permissions that adjust as an agent’s task or risk level changes.

Finally, there’s no lifecycle management for agent identities: no clear process for creation, no automated revocation when agents are decommissioned, and no complete audit trail, which leaves gaps in accountability.
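The alternative to shared service accounts is one identity per agent, with an accountable owner and an explicit lifecycle. This minimal in-memory sketch assumes a hypothetical `AgentRegistry`; a real system would back it with an identity provider and durable audit storage.

```python
from __future__ import annotations

import secrets
from dataclasses import dataclass, field


@dataclass
class AgentIdentity:
    agent_id: str
    owner: str      # human or team accountable for the agent
    workflow: str
    active: bool = True
    credential: str = field(default_factory=lambda: secrets.token_urlsafe(32))


class AgentRegistry:
    """Hypothetical registry: one identity and credential per agent."""

    def __init__(self) -> None:
        self._agents: dict[str, AgentIdentity] = {}
        self.events: list[tuple[str, str, str]] = []  # (agent_id, event, detail)

    def register(self, agent_id: str, owner: str, workflow: str) -> AgentIdentity:
        if agent_id in self._agents:
            raise ValueError(f"{agent_id} already exists")
        ident = AgentIdentity(agent_id, owner, workflow)
        self._agents[agent_id] = ident
        self.events.append((agent_id, "created", f"owner={owner}"))
        return ident

    def decommission(self, agent_id: str) -> None:
        ident = self._agents[agent_id]
        ident.active = False
        ident.credential = ""  # revoke: the old secret no longer matches
        self.events.append((agent_id, "revoked", "decommissioned"))

    def authenticate(self, credential: str) -> AgentIdentity:
        for ident in self._agents.values():
            if ident.active and secrets.compare_digest(ident.credential, credential):
                return ident
        raise PermissionError("unknown or revoked credential")

    def record_action(self, credential: str, action: str) -> None:
        """Attribute the action to exactly one agent, or refuse it."""
        ident = self.authenticate(credential)
        self.events.append((ident.agent_id, "action", action))
```

Every action is attributed to a specific agent and its owner, and decommissioning revokes the credential immediately, so a retired agent's leftover secret stops working.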

Failing to address these structural issues erodes trust in the entire automation effort.

Conclusion: The Widening Gap

Agentic AI is the logical next step in a progression that started with simple menu-driven chatbots. But its impact will be radical. The shift from read-only to read-write AI fundamentally changes what’s possible, and what’s at stake.

The gap between AI leaders and laggards will widen quickly. Organizations that master agentic systems will operate with dramatically less friction and cost, executing complex workflows at machine scale. Those that wait will find themselves competing against fundamentally faster operating models.

But none of this works without the right foundation. Agentic systems depend on identity architectures built for non-human actors: machine-to-machine authentication, dynamic permissions, policy enforcement at machine speed, and comprehensive audit trails. Without these controls, adoption stalls or creates uncontrolled security exposure.

The organizations moving now are assessing where agentic capabilities deliver value, evaluating whether their identity systems can handle autonomous agents at scale, and building governance frameworks that define clear boundaries for AI behavior. They’re building organizational muscle while the window is still open.

The next decade belongs to whoever acts first. Everyone else will spend it trying to catch up.