It’s not DNS

There is no way it’s DNS

It was DNS.

–Unknown wise person

The bug

As usual, at the beginning there was a bug. We stumbled on an unexpected behavior while doing some testing. Sometimes (around 5% of cases) it took longer to establish connections via Aembit Edge. (Aembit Edge, by the way, is our product’s transparent proxy, which intercepts access attempts and then queries Aembit Cloud. Once authorized by Aembit Cloud, the Edge injects credentials into validated requests.)

Back to the problem: Connections going through Aembit Edge were also occasionally timing out outright (even less often than the slow connections). Part of our Aembit DNA is to leave no stone unturned. As a result, we rolled up our sleeves and started to investigate.

Sync vs Async

It took us probably a couple of days to realize that our whole edge component sometimes froze for about 10 seconds. After finding this symptom, getting to the root cause was just a matter of methodical bug localization.

We found that in one place we accidentally used synchronous DNS resolution (Rust-native ToSocketAddrs). And it’s a big no-no to use such code in Rust asynchronous applications. The documentation is very clear: “Note that this function may block the current thread while resolution is performed.”

The nice thing was that the fix was trivial. We dropped in Tokio's async replacement for this API, and it immediately solved the timeouts. The funny (and a bit embarrassing) part is that I was involved in troubleshooting exactly the same bug (sync DNS resolution in an async application) at my previous company.
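To make the difference concrete, here is a minimal std-only sketch, not our production code. `resolve_blocking` shows the kind of call that stalled us. `resolve_off_thread` is a hypothetical helper (the name and shape are assumptions for illustration) that runs the same lookup on a separate thread, so the caller is never stuck inside the resolver.

```rust
use std::io;
use std::net::{SocketAddr, ToSocketAddrs};
use std::sync::mpsc::{self, Receiver};
use std::thread;

/// Blocking lookup: the calling thread is stuck until the resolver answers.
/// Called from an async task, this stalls every other task scheduled on the
/// same executor thread for the duration of the lookup.
fn resolve_blocking(target: &str) -> io::Result<Vec<SocketAddr>> {
    Ok(target.to_socket_addrs()?.collect())
}

/// Hypothetical helper: run the same lookup on a dedicated thread and hand
/// back a channel, so the caller decides when (and how long) to wait.
fn resolve_off_thread(target: String) -> Receiver<io::Result<Vec<SocketAddr>>> {
    let (tx, rx) = mpsc::channel();
    thread::spawn(move || {
        // The receiver may already have given up; ignore a failed send.
        let _ = tx.send(resolve_blocking(&target));
    });
    rx
}
```

In a Tokio application you would not hand-roll this. An async resolver such as `tokio::net::lookup_host` is awaited instead, which keeps the executor thread free while the lookup is in flight.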

Anyway, we thought that would be it. But I still had a nagging question: Why was DNS resolution taking such a long time (and blocking the thread for so long)?

Taking into account that I saw several DNS-related errors in the logs, I felt that there could be some underlying infrastructure problem. Apparently, we were naive. This was just the beginning.

Kernel networking/iptables and friends

Once we deployed a new build, the timeouts disappeared, but we still saw connections taking longer than expected (the same 5% of the time) and were still seeing some strange behavior around DNS.

As I mentioned initially, we suspected issues with infrastructure. However, we were able to reproduce the issue in another environment, which removed this suspicion.

I will spare you most of the details of how we beat our heads against the wall for probably a week to collect enough information to devise a theory explaining what was happening.

There were a couple of things that we noticed during this time. And these were the proverbial dots which we tried to connect:

- DNS-related errors, specifically around our code that proxies DNS requests.

- The issue only happened when the original DNS requests (coming from a client workload) passed through iptables (and were redirected toward our component).

- The system reported that some packets’ destination port was unreachable.

Now we were able to dive into the good stuff. What we found is that we accidentally stumbled on a race condition in Linux networking.

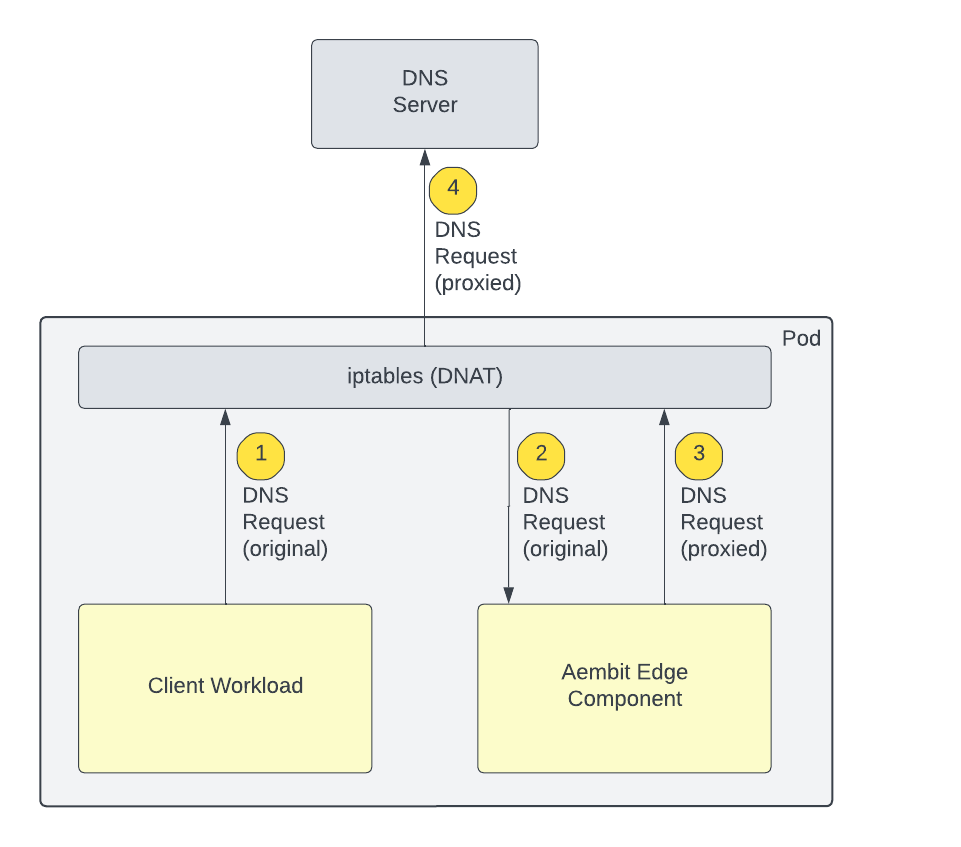

Here is some background/visualization of our setup:

- One of the Aembit Edge components runs as a sidecar to the client workload. As a result, they share a network namespace, including the IP address.

- We redirect DNS traffic originating from the client workload toward our component. This is done via the following iptables rule:

iptables -t nat -A $chain_name -p udp --dport 53 -j REDIRECT --to 8053

- Our edge component processes DNS requests and sends them unaltered to the original DNS server. We make sure to exclude our own component from this redirection (to prevent our egress traffic from looping back to us). This is done by the following rule:

iptables -t nat -A $chain_name -m owner --uid-owner $aembit_user_id -j RETURN

And here is what we found (aka the root cause).

- The client workload makes a DNS request. It creates a socket, binds it to an ephemeral port, and sends the request.

- The iptables NAT rule shown above overwrites the destination port to redirect it to our edge component.

- Our edge component processes the DNS request and forwards it to the original DNS server. It then receives the response and returns it to the client workload.

All of this goes through the netfilter (iptables) facility. Its connection tracking module, conntrack, records both the forward and the return path as a single “connection.”

The interesting part is that netfilter doesn’t evaluate the NAT rules for every packet. For performance reasons, only the first packet of a flow traverses them; later packets reuse the decision stored in conntrack until the entry expires. (The expiration for such a UDP flow is 180 seconds.)

As a result, any UDP datagram sent from the IP of the client workload or the Aembit Edge component (remember that they share the same IP) and from this ephemeral port to the DNS server host/port is handled based on the conntrack entry (instead of all iptables rules being reevaluated).

This all works well until the client workload releases that socket (and the ephemeral port becomes available to other apps). And here comes the race condition. The conntrack entry expires only after 180 seconds, while the ephemeral port becomes bindable pretty much immediately.

Our component was also using an ephemeral port to communicate with the DNS server. Sometimes the system handed us an ephemeral port that had recently been used (and was still tracked by conntrack). If we then sent a proxied DNS request to the DNS server from this port, the entire five-tuple (source IP and port, destination IP and port, and protocol) looked *exactly* the same as the original connection from the client workload to the DNS server. Netfilter happily skipped the rule that checks the process owner and used the conntrack entry to redirect our own traffic back to our component, creating a loop.
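The race is easier to see in a toy model. The sketch below is a deliberately simplified simulation, not how netfilter is actually implemented: a flow table keyed by the five-tuple, consulted before the NAT rules, with the 180-second expiry quoted above. Every name, address, port, and uid in it is made up for illustration.

```rust
use std::collections::HashMap;
use std::net::Ipv4Addr;

/// The five-tuple that identifies a flow in this toy model.
#[derive(Clone, Copy, PartialEq, Eq, Hash, Debug)]
struct FlowKey {
    src_ip: Ipv4Addr,
    src_port: u16,
    dst_ip: Ipv4Addr,
    dst_port: u16,
    proto: u8, // 17 = UDP
}

#[derive(Clone, Copy, PartialEq, Eq, Debug)]
enum Verdict {
    RedirectedToEdge, // destination port rewritten toward the edge component
    SentToDnsServer,  // packet leaves untouched
}

const UDP_TIMEOUT_SECS: u64 = 180; // expiry quoted in the text
const EDGE_UID: u32 = 1001; // hypothetical uid of the edge component

struct Netfilter {
    /// flow -> (decision made for the first packet, expiry time in seconds)
    conntrack: HashMap<FlowKey, (Verdict, u64)>,
}

impl Netfilter {
    fn new() -> Self {
        Netfilter { conntrack: HashMap::new() }
    }

    /// Decide what happens to an outgoing packet sent by `sender_uid` at time `now`.
    fn output(&mut self, flow: FlowKey, sender_uid: u32, now: u64) -> Verdict {
        // Known, unexpired flow: reuse the stored decision and skip the rules.
        // Note that the sender's uid is never looked at on this path.
        if let Some((verdict, expires)) = self.conntrack.get(&flow).copied() {
            if now < expires {
                self.conntrack.insert(flow, (verdict, now + UDP_TIMEOUT_SECS));
                return verdict;
            }
        }
        // New flow: evaluate the NAT rules once and remember the outcome.
        let verdict = if sender_uid == EDGE_UID {
            Verdict::SentToDnsServer // owner rule: RETURN
        } else if flow.proto == 17 && flow.dst_port == 53 {
            Verdict::RedirectedToEdge // REDIRECT rule
        } else {
            Verdict::SentToDnsServer
        };
        self.conntrack.insert(flow, (verdict, now + UDP_TIMEOUT_SECS));
        verdict
    }
}
```

In this model, if a client (any uid other than `EDGE_UID`) sends a DNS query from port 40000 at time 0, the flow is recorded as `RedirectedToEdge`. If the edge component then sends from the same address and port to the same server at time 10, `output` returns `RedirectedToEdge` again even though the owner rule would have let it through. The same call against an empty table returns `SentToDnsServer`.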

So, the bug ended up being a race condition between conntrack entry expiration and the ephemeral port being released. I wish the kernel cleared the matching conntrack entries when a port is released. However, there is a good chance that I am missing some edge cases around SO_REUSEPORT or something else that would break if the entry were erased.

The fix

One potential fix was trivial. We don’t have to use an ephemeral port, so we will never stumble on a recently used ephemeral port. However, the direction in which our code is moving makes this solution temporary for us. As a result, we were hesitant to use it.
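As a rough sketch of what that option looks like (an illustration with a hypothetical helper name, not our actual code), the only difference is which port the upstream socket is bound to. Port 0 asks the kernel for an ephemeral port; a fixed port chosen outside the ephemeral range can never collide with a port a client workload was just handed.

```rust
use std::io;
use std::net::{Ipv4Addr, UdpSocket};

/// Hypothetical helper: bind the socket used to talk to the upstream DNS server.
/// `None` lets the kernel pick an ephemeral port (port 0).
/// `Some(port)` pins the source port; to avoid the race, the caller should pick
/// a port outside the system's ephemeral port range.
fn bind_upstream(fixed_port: Option<u16>) -> io::Result<UdpSocket> {
    UdpSocket::bind((Ipv4Addr::UNSPECIFIED, fixed_port.unwrap_or(0)))
}
```

The trade-off is that a single pinned port means a single socket for all upstream traffic, which is one reason this approach may not fit every design.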

The nice thing is that my colleague (Will Springer) was able to find a cleaner solution: keep egress DNS traffic from our app out of conntrack entirely.

iptables -t raw -A OUTPUT -p udp --dport 53 -m owner --uid-owner $aembit_user_id -j NOTRACK

The raw table is evaluated before connection tracking kicks in, so packets marked NOTRACK are never matched against a conntrack entry and never NATed. This works well for us (with some negligible performance hit). As soon as we put the fix in place, the random timeouts around DNS proxying disappeared and tcpdump stopped showing dropped UDP datagrams.

Summary

Most of the time, I (and the rest of our edge team) work at the application level. It was an incredibly interesting exercise to dive a level down to investigate and troubleshoot a problem like this. And we were extremely proud to have tackled it.

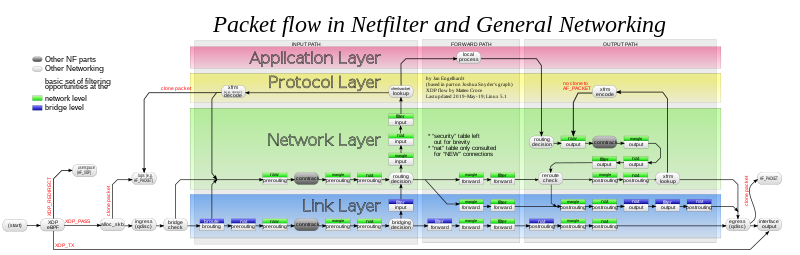

And finally, if you ever find yourself needing to understand netfilter, I strongly recommend reviewing this amazing diagram (originally created by Jan Engelhardt and available on Wikipedia).

For more information about Aembit or to get started with our Workload IAM platform for free, visit aembit.io.