The explosion of AI agents and large language models (LLMs) is reshaping how we think about automation, decision-making, and technology in general.

AI agents are no longer just tools we deploy – they are active participants in increasingly complex ecosystems, handling everything from customer support to infrastructure management, often autonomously.

But as their roles expand, so do the challenges and opportunities around managing their interactions, securing their actions, and ensuring accountability.

The key to navigating this shift lies in understanding when to rely on non-human identity and access management (IAM) systems, when non-human governance tools are more appropriate, and when a combination of both is necessary. Each plays a distinct role in managing the lifecycle, security, and accountability of AI agents – entities that, while not human, wield significant power.

As AI agents take on broader roles, several areas demand attention. Below, we explore five key aspects of managing non-human identities and ensuring their secure, compliant, and efficient operation.

1) Rapid Growth: AI Proliferating Across Industries

AI agents are spreading rapidly across industries and taking on sophisticated roles. In finance, they’re detecting fraud and optimizing trading strategies. In healthcare, they’re analyzing medical records to suggest treatment plans. Across sectors, these agents automate mundane tasks and run complex processes without constant human oversight.

With this proliferation, organizations face the challenge of managing a rapid influx of active participants in their systems. But managing an AI agent is not a one-size-fits-all task. Some agents perform simple functions with minimal access needs, while others require deep integration into critical systems, demanding a more careful approach.

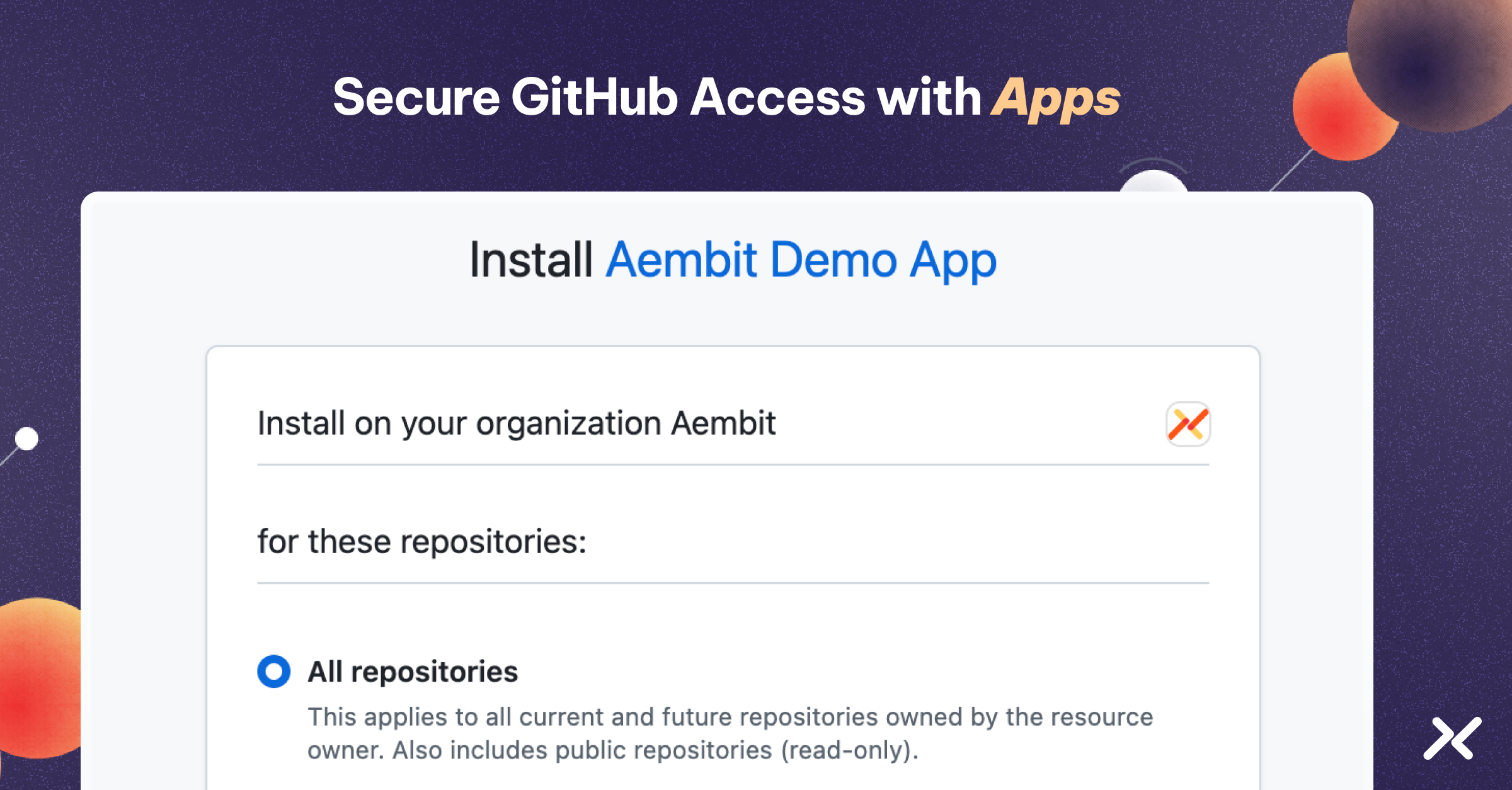

Where Non-Human IAM Matters: For AI agents handling sensitive data or interacting with secure systems, non-human IAM solutions are essential. They provide the infrastructure to securely provision, manage, and revoke the credentials of these non-human entities, ensuring that only authorized agents have access to critical resources. Non-human IAM helps organizations keep pace with the rapid growth of non-human identities, particularly in environments where AI agents operate at scale and touch multiple systems.
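
The credential lifecycle described above (provision, validate, revoke) can be sketched in a few lines. This is a minimal in-memory illustration, not Aembit’s implementation or any product’s API; `CredentialIssuer`, its 15-minute default lifetime, and the scope strings are hypothetical. Pairing scopes with short lifetimes means a forgotten credential eventually stops working on its own.

```python
import secrets
import time
from dataclasses import dataclass, field


@dataclass
class Credential:
    agent_id: str
    token: str
    scopes: frozenset
    expires_at: float


@dataclass
class CredentialIssuer:
    """Hypothetical in-memory issuer that provisions, validates, and revokes agent credentials."""
    ttl_seconds: float = 900.0  # assumed 15-minute lifetime; real policies vary
    _active: dict = field(default_factory=dict)

    def provision(self, agent_id: str, scopes: set[str]) -> Credential:
        # Random, unguessable token bound to one agent identity and a fixed scope set.
        cred = Credential(
            agent_id=agent_id,
            token=secrets.token_urlsafe(32),
            scopes=frozenset(scopes),
            expires_at=time.time() + self.ttl_seconds,
        )
        self._active[cred.token] = cred
        return cred

    def validate(self, token: str, scope: str) -> bool:
        cred = self._active.get(token)
        if cred is None or time.time() >= cred.expires_at:
            return False  # unknown, revoked, or expired
        return scope in cred.scopes

    def revoke_agent(self, agent_id: str) -> int:
        # Revoke every credential held by a decommissioned or compromised agent.
        doomed = [t for t, c in self._active.items() if c.agent_id == agent_id]
        for t in doomed:
            del self._active[t]
        return len(doomed)


issuer = CredentialIssuer()
cred = issuer.provision("fraud-detector-01", {"transactions:read"})
print(issuer.validate(cred.token, "transactions:read"))   # True
print(issuer.validate(cred.token, "transactions:write"))  # False: scope never granted
issuer.revoke_agent("fraud-detector-01")
print(issuer.validate(cred.token, "transactions:read"))   # False: credential revoked
```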

When Non-Human Governance Takes Priority: For AI agents making business-critical decisions, such as approving loans, suggesting medical diagnoses, or generating financial reports, non-human governance tools play a larger role. These agents need to operate within strict policy frameworks so that their actions align with business rules, legal requirements, and ethical considerations. Governance systems track decisions, monitor compliance, and help ensure that an agent’s autonomy doesn’t lead to unintended consequences.
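
To make “operating within strict policy frameworks” concrete, here is a minimal sketch of a pre-execution policy check for a loan-approval agent. The `Decision` type, the 50,000 auto-approval limit, and the allowed actions are hypothetical values chosen for illustration; real governance tools express such rules in their own policy languages.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    ESCALATE = "escalate"  # route to a human reviewer
    BLOCK = "block"


@dataclass(frozen=True)
class Decision:
    agent_id: str
    action: str      # e.g. "approve_loan"
    amount: float
    rationale: str   # explanation the agent records for auditors


# Hypothetical policy values for illustration only.
AUTO_APPROVE_LIMIT = 50_000.0
ALLOWED_ACTIONS = {"approve_loan", "decline_loan"}


def evaluate(decision: Decision) -> tuple[Verdict, str]:
    """Check an agent's proposed decision against business rules before it takes effect."""
    if decision.action not in ALLOWED_ACTIONS:
        return Verdict.BLOCK, f"action {decision.action!r} is outside this agent's mandate"
    if not decision.rationale.strip():
        return Verdict.BLOCK, "no rationale recorded; decision cannot be audited"
    if decision.action == "approve_loan" and decision.amount > AUTO_APPROVE_LIMIT:
        return Verdict.ESCALATE, "amount exceeds auto-approval limit; human sign-off required"
    return Verdict.ALLOW, "within policy"


verdict, reason = evaluate(
    Decision("loan-agent-7", "approve_loan", 75_000, "stable income, low debt ratio")
)
print(verdict)  # Verdict.ESCALATE
```

Escalating rather than blocking keeps the agent useful for routine cases while reserving human judgment for the decisions with the most at stake.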

2) Dynamic Behavior: The Need for Flexibility

AI agents are not static. Their ability to learn, adapt, and perform tasks in novel ways is what makes them so powerful. However, this dynamic behavior poses a significant challenge: How do you manage their identities and permissions when their roles are constantly evolving?

Non-Human IAM’s Role in Flexibility: IAM solutions designed for non-human identities are particularly useful for managing the lifecycle of evolving AI agents. Dynamic access controls, for example, grant permissions based on real-time context rather than static roles. As an agent’s tasks change, whether it’s accessing new datasets or interacting with a different system, its access can be adjusted accordingly. Non-human IAM keeps agents limited to the permissions they need at a given moment, reducing risk and preventing over-privileged access.
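
As a rough sketch of context-based access, the check below derives an agent’s permissions from the task it is currently performing rather than from a fixed role. The task names, resources, and UTC write window are hypothetical assumptions; production systems would typically delegate this decision to a policy engine.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical task-to-permission map maintained by an orchestrator.
TASK_PERMISSIONS = {
    "summarize-claims": {("dataset:claims-2024", "read")},
    "update-schedule": {("system:mes", "read"), ("system:mes", "write")},
}
WRITE_HOURS_UTC = range(6, 20)  # assumed window in which writes are monitored


@dataclass(frozen=True)
class AccessRequest:
    agent_id: str
    resource: str
    operation: str      # "read" or "write"
    current_task: str   # the task the agent is performing right now
    at: datetime        # timezone-aware request time


def is_allowed(req: AccessRequest) -> bool:
    """Evaluate each request against live context rather than a static role."""
    needed = TASK_PERMISSIONS.get(req.current_task, set())
    if (req.resource, req.operation) not in needed:
        return False  # the current task does not need this access
    hour = req.at.astimezone(timezone.utc).hour
    if req.operation == "write" and hour not in WRITE_HOURS_UTC:
        return False  # writes allowed only inside the monitored window
    return True


noon = datetime(2024, 5, 1, 12, tzinfo=timezone.utc)
agent = "ops-agent-3"
print(is_allowed(AccessRequest(agent, "system:mes", "write", "summarize-claims", noon)))  # False
print(is_allowed(AccessRequest(agent, "system:mes", "write", "update-schedule", noon)))   # True
```

The same agent gets different answers for the same resource because its task changed, which is the point of moving away from static roles.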

Governance Tools for Non-Human Accountability: Non-human governance systems maintain clear oversight even as AI agents adapt and change. They track the decisions these agents make, provide audit trails, and check compliance with both internal policies and external regulations. In fast-changing environments, where an agent’s behavior today may differ from last month’s, that record is what makes accountability possible.

When They Work Together: In highly regulated industries, such as finance or healthcare, the combination of non-human IAM and governance provides complementary strengths. While non-human IAM controls the access AI agents have, governance tools monitor how that access is used. Together, they ensure agents remain secure and compliant as they perform dynamic tasks.

3) Threats: The Expanding Attack Surface

With AI agents performing increasingly sensitive and autonomous tasks, the attack surface is growing. These non-human agents are accessing critical systems, processing sensitive data, and even making decisions that affect the operational state of entire infrastructures. Naturally, they become high-value targets for cyberattacks.

Zero-Trust IAM for Non-Human Agents: A Zero Trust approach to security, built on strong non-human IAM foundations, is key. Non-human agents must be authenticated at every step, and their access should be continuously verified. Non-human IAM systems support this by checking each request and granting agents only the minimum permissions they need, which limits lateral movement within systems if one agent is compromised.
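
One way to picture continuous verification and least privilege together is a guard that re-checks grants on every call and denies anything not explicitly granted. This sketch assumes `agent_id` has already been authenticated for the current request; the `GRANTS` table, scope names, and functions are hypothetical.

```python
import functools

# Hypothetical grants: each agent identity holds only the scopes its job needs.
GRANTS: dict[str, frozenset[str]] = {
    "inventory-agent": frozenset({"inventory:read"}),
    "scheduler-agent": frozenset({"inventory:read", "schedule:write"}),
}


class AccessDenied(PermissionError):
    """Raised when a caller lacks the scope an operation requires."""


def requires_scope(scope: str):
    """Re-check the caller's grants on every call instead of trusting earlier checks."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(agent_id: str, *args, **kwargs):
            # Deny by default: an unknown identity gets an empty scope set.
            if scope not in GRANTS.get(agent_id, frozenset()):
                raise AccessDenied(f"{agent_id} lacks {scope}")
            return func(agent_id, *args, **kwargs)
        return wrapper
    return decorator


@requires_scope("inventory:read")
def read_inventory(agent_id: str, sku: str) -> int:
    return 42  # placeholder stock level


@requires_scope("schedule:write")
def update_schedule(agent_id: str, line: str, start: str) -> str:
    return f"{line} starts at {start}"


# A compromised inventory agent cannot pivot into scheduling.
try:
    update_schedule("inventory-agent", "line-3", "06:00")
except AccessDenied as err:
    print(err)  # inventory-agent lacks schedule:write
```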

Non-Human Governance for Risk Mitigation: Security is often framed as solely about restricting access, but it’s equally about monitoring actions and ensuring accountability. Non-human governance tools provide that oversight, flagging anomalous behavior and keeping AI agents within predefined risk thresholds. While non-human IAM locks down access, governance ensures that even authorized actions are reviewed and analyzed, particularly when agents make high-stakes decisions.
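
As an illustration of flagging behavior that crosses predefined risk thresholds, the hypothetical `ActivityMonitor` below watches actions that IAM has already allowed and raises alerts on unusual volume or value. The thresholds are assumptions, and real anomaly detection is usually far richer than a sliding-window count.

```python
from collections import defaultdict, deque


class ActivityMonitor:
    """Hypothetical governance monitor: flags agents whose activity exceeds set thresholds."""

    def __init__(self, max_actions: int, window_seconds: float, max_value: float):
        self.max_actions = max_actions
        self.window = window_seconds
        self.max_value = max_value
        self._events = defaultdict(deque)  # agent_id -> timestamps of recent actions

    def record(self, agent_id: str, timestamp: float, value: float = 0.0) -> list[str]:
        """Log one authorized action and return any alerts it triggers."""
        alerts = []
        events = self._events[agent_id]
        events.append(timestamp)
        # Drop events that have fallen out of the sliding window.
        while events and events[0] <= timestamp - self.window:
            events.popleft()
        if len(events) > self.max_actions:
            alerts.append(f"{agent_id}: {len(events)} actions in {self.window}s window")
        if value > self.max_value:
            alerts.append(f"{agent_id}: action value {value} exceeds {self.max_value}")
        return alerts


monitor = ActivityMonitor(max_actions=3, window_seconds=60, max_value=10_000)
for t in range(4):
    alerts = monitor.record("infra-agent-1", timestamp=float(t))
print(alerts)  # ['infra-agent-1: 4 actions in 60s window']
```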

When They Work Together: In sensitive environments like cloud infrastructure management, AI agents need both tight access controls (non-human IAM) and real-time monitoring (non-human governance). The combination ensures agents don’t misuse their access or make harmful decisions without oversight. Together, non-human IAM and governance reduce the overall attack surface while keeping operations running.

4) Collaboration: Complexity in Coordination

In many ecosystems, AI agents don’t work in isolation. In a supply chain, for example, one agent might monitor inventory levels while another adjusts manufacturing schedules based on that data. If the inventory data can be spoofed or intercepted in transit, the scheduling agent acts on bad information, so secure communication between agents is essential for reliable collaboration.

Non-Human IAM for Secure Inter-Agent Communication: When multiple AI agents interact across systems, non-human IAM helps secure that communication. By federating identities across platforms, non-human IAM solutions let each agent authenticate itself to others without exposing credentials or creating security vulnerabilities.
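
The core idea, agents proving their identity to each other without handing over long-lived secrets, can be sketched with a broker that mints short-lived, audience-bound tokens. This is a simplification under stated assumptions: `TokenIssuer` is hypothetical, it signs with a stdlib HMAC key that never leaves the broker, and the receiving agent asks the broker to verify. Real federated setups often use standards such as OpenID Connect with asymmetrically signed JWTs instead, so verifiers never hold signing material.

```python
import base64
import hashlib
import hmac
import json
import secrets
import time
from typing import Optional


class TokenIssuer:
    """Hypothetical trust broker that mints short-lived, audience-bound assertions for agents."""

    def __init__(self, ttl_seconds: int = 300):
        self._key = secrets.token_bytes(32)  # signing key never leaves the broker
        self.ttl = ttl_seconds

    def _sign(self, body: str) -> str:
        return hmac.new(self._key, body.encode(), hashlib.sha256).hexdigest()

    def mint(self, agent_id: str, audience: str) -> str:
        claims = {"sub": agent_id, "aud": audience, "exp": int(time.time()) + self.ttl}
        body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode()).decode()
        return f"{body}.{self._sign(body)}"

    def verify(self, token: str, audience: str) -> Optional[str]:
        """Return the caller's identity if the token is genuine, unexpired, and meant for `audience`."""
        try:
            body, sig = token.rsplit(".", 1)
        except ValueError:
            return None  # malformed token
        if not hmac.compare_digest(sig, self._sign(body)):
            return None  # forged or altered
        claims = json.loads(base64.urlsafe_b64decode(body))
        if claims["aud"] != audience or claims["exp"] < time.time():
            return None  # wrong recipient or expired
        return claims["sub"]


broker = TokenIssuer()
token = broker.mint("inventory-agent", audience="scheduler-agent")
print(broker.verify(token, audience="scheduler-agent"))  # inventory-agent
print(broker.verify(token, audience="billing-agent"))    # None: wrong audience
```

Binding each token to a single audience means a token stolen from one exchange can’t be replayed against a different agent.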

Non-Human Governance for Coordinated Oversight: Governance tools manage the interactions between non-human agents, guiding their actions to align with organizational policies and compliance standards as AI systems collaborate on business decisions.

5) Accountability and Ethics: Managing Autonomous Decisions

As AI agents become more autonomous, they begin to make decisions that can have significant consequences. These decisions may affect customer experiences, financial outcomes, or even human health. In such scenarios, it’s vital to have mechanisms in place to ensure accountability.

Non-Human IAM for Identity Traceability: When each AI agent has its own unique identity rather than shared credentials, non-human IAM can trace every action back to the agent that took it. This allows organizations to track not just the decisions made, but who (or what) made them, enabling swift responses to any issues or discrepancies.

Non-Human Governance Tools for Transparency: Governance tools provide the transparency needed to hold AI agents accountable. With audit trails, decision logs, and compliance checks, these systems track the actions of non-human agents, ensuring their decisions align with ethical guidelines and regulatory frameworks.
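
Traceability and audit trails both depend on logs that tie each action to an agent identity and can’t be silently edited. A minimal sketch, assuming a hypothetical `AuditLog` with SHA-256 hash chaining: each entry records who acted, what happened, and the policy result, and commits to the previous entry’s hash so that tampering shows up on verification.

```python
import hashlib
import json
import time


class AuditLog:
    """Hypothetical append-only decision log; each entry commits to the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def append(self, agent_id: str, action: str, outcome: str, policy_check: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {
            "ts": time.time(),
            "agent_id": agent_id,  # who (or what) acted
            "action": action,
            "outcome": outcome,
            "policy_check": policy_check,
            "prev_hash": prev_hash,
        }
        entry["hash"] = hashlib.sha256(json.dumps(entry, sort_keys=True).encode()).hexdigest()
        self.entries.append(entry)
        return entry

    def verify_chain(self) -> bool:
        """Recompute every hash; editing an entry or removing one mid-chain breaks it."""
        prev = self.GENESIS
        for e in self.entries:
            body = {k: v for k, v in e.items() if k != "hash"}
            if body["prev_hash"] != prev:
                return False
            if hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest() != e["hash"]:
                return False
            prev = e["hash"]
        return True

    def by_agent(self, agent_id: str) -> list:
        return [e for e in self.entries if e["agent_id"] == agent_id]


log = AuditLog()
log.append("loan-agent-7", "approve_loan", "approved", "allow")
log.append("report-agent-2", "publish_report", "published", "allow")
print(log.verify_chain())  # True
log.entries[0]["outcome"] = "declined"  # simulate tampering
print(log.verify_chain())  # False
```

Hash chaining alone doesn’t reveal truncation of the newest entries; anchoring the latest hash somewhere outside the log is a common complement.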

Conclusion

AI agents and LLMs are shaping how we work and innovate. But with this growth comes complexity. Managing these systems isn’t just about plugging security gaps or ticking compliance boxes – it’s about having the right controls to keep up with their evolving roles.

Non-human IAM tackles the foundational challenge: securing access as these agents interact with critical systems. But that’s only half the equation. Governance steps in to provide the necessary checks, making sure that the decisions these agents make are not only safe but also aligned with business priorities and ethical standards.

The real power lies in getting both right. IAM without governance leaves room for unchecked actions. Governance without IAM leaves access poorly secured. Together, they create a structure that’s flexible enough to handle the unpredictable nature of AI while keeping systems secure and accountable.

To see how Aembit can help, visit aembit.io.