Static secrets create persistent attack vectors that plague cloud-native environments.

API keys hardcoded in applications, credentials stored in configuration files, and shared tokens across microservices provide attackers with ongoing access once compromised. Modern applications amplify this problem. Thousands of services requiring database access, third-party API calls, and cross-system authentication create an unmanageable credential sprawl.

Dynamic authorization eliminates this fundamental vulnerability. It replaces stored credentials with real-time access decisions. Instead of managing static secrets, you verify workload identity through environmental attestation and issue ephemeral tokens based on context-aware policies.

Environmental attestation provides cryptographic proof that a workload is running in a trusted environment, such as a verified Kubernetes pod or authenticated cloud instance.

This article breaks down why static secrets fail in modern environments and provides concrete implementation strategies for deploying dynamic authorization across your infrastructure.

What Are Static Secrets, Rotated Secrets & Dynamic Authorization?

The distinction between these approaches centers on a fundamental architectural question: where does trust verification happen—at credential issuance or at access time?

- Static secrets establish trust once, then rely on possession for ongoing access. Your microservice authenticates to PostgreSQL using the same database password for months. The security model assumes credential possession equals authorization.

- Rotated secrets automate credential lifecycle management (rotating API keys monthly or quarterly, for example) but maintain the same possession-based trust model. The credential still exists as a retrievable artifact between rotations, so anyone who obtains it can use it until the next rotation.

- Dynamic authorization shifts trust verification to access time. Workloads present cryptographic proof of identity, policies evaluate current context, and short-lived tokens grant specific permissions. No persistent credentials exist to steal.

The key difference is that rotated secrets only shorten the window in which a stolen credential remains useful, while dynamic authorization removes the long-lived credential itself and leaves only short-lived, narrowly scoped tokens. This architectural shift changes your operational model from “how do we protect stored credentials?” to “how do we verify workload authenticity at runtime?”

Why Static Secrets Fail in Cloud-Native Environments and What to Do Instead

Cloud-native architectures expose the fundamental weaknesses of credential-based security models. Problems multiply as organizations scale their infrastructure and accelerate deployment cycles.

Credential Sprawl Becomes Unmanageable

Modern applications fragment into hundreds of microservices, each requiring database connections, API access, and third-party integrations. Development teams duplicate secrets across repositories, CI/CD pipelines, and deployment configurations. Security teams lose visibility into where credentials exist and which services depend on them.

Solution: Eliminate stored credentials entirely, so there are no longer thousands of keys to track across multiple environments.

Rotation Breaks More Than It Fixes

Manual rotation processes break applications when credentials update without coordinated deployment changes. Teams avoid rotation entirely, leaving credentials active for months. Automated rotation requires complex orchestration to prevent service disruptions.

Solution: Use tokens that expire automatically without requiring application updates, eliminating rotation coordination entirely.
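To make the "no rotation coordination" idea concrete, here is a minimal client-side sketch. It assumes a hypothetical `fetch` callable that returns a token and its expiry time from whatever broker you use; `TokenProvider` and `refresh_margin` are illustrative names, not part of any specific product.

```python
import time
from typing import Callable, Optional, Tuple


class TokenProvider:
    """Hands out a short-lived token and refetches it shortly before expiry.

    `fetch` is a hypothetical callable returning (token, expires_at_epoch_seconds).
    """

    def __init__(
        self,
        fetch: Callable[[], Tuple[str, float]],
        refresh_margin: float = 30.0,
        clock: Callable[[], float] = time.time,
    ) -> None:
        self._fetch = fetch
        self._margin = refresh_margin
        self._clock = clock
        self._token: Optional[str] = None
        self._expires_at = 0.0

    def get(self) -> str:
        now = self._clock()
        # Refresh when there is no token yet, or when it is about to expire.
        if self._token is None or now >= self._expires_at - self._margin:
            self._token, self._expires_at = self._fetch()
        return self._token
```

Because the application asks the provider for a token on every call, an expiring token is replaced in the background of normal operation and no deployment has to be coordinated with a rotation schedule.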

The Secret-Zero Problem Persists

Every system needs initial credentials to access its credential store—creating a recursive authentication problem. Teams hardcode bootstrap credentials or rely on infrastructure-level secrets that defeat the security model.

Solution: Authenticate through cryptographic verification of your runtime environment instead of bootstrap credentials.

You can use signed cloud metadata from AWS EC2 instances, Kubernetes service account tokens, or container image signatures to prove workload identity. This eliminates the need for any stored “secret zero” to bootstrap the authentication process.
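As a rough illustration of what a verifier checks in a Kubernetes service account token, the sketch below inspects the token's claims. It deliberately skips signature verification, which a real verifier must perform first against the cluster's published signing keys. The issuer and audience values are assumptions chosen for the example.

```python
import base64
import json
import time
from typing import Optional, Tuple

# Hypothetical values; a real deployment takes these from cluster configuration.
EXPECTED_ISSUER = "https://kubernetes.default.svc.cluster.local"
EXPECTED_AUDIENCE = "credential-broker"


def decode_jwt_payload(token: str) -> dict:
    """Decode the payload segment of a JWT WITHOUT verifying its signature."""
    payload_b64 = token.split(".")[1]
    padded = payload_b64 + "=" * (-len(payload_b64) % 4)
    return json.loads(base64.urlsafe_b64decode(padded))


def workload_identity(claims: dict, now: Optional[float] = None) -> Tuple[str, str]:
    """Return (namespace, service_account) or raise ValueError if a check fails."""
    now = time.time() if now is None else now
    if claims.get("iss") != EXPECTED_ISSUER:
        raise ValueError("unexpected issuer")
    aud = claims.get("aud", [])
    audiences = [aud] if isinstance(aud, str) else aud
    if EXPECTED_AUDIENCE not in audiences:
        raise ValueError("token not intended for this broker")
    if claims.get("exp", 0) <= now:
        raise ValueError("token expired")
    # Bound service account tokens carry identity under the "kubernetes.io" claim.
    k8s = claims.get("kubernetes.io", {})
    namespace = k8s.get("namespace")
    account = k8s.get("serviceaccount", {}).get("name")
    if not namespace or not account:
        raise ValueError("missing Kubernetes identity claims")
    return namespace, account
```

The point of the sketch is that the workload never holds a bootstrap password: the platform mints the token, and the verifier only needs public information to check it.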

Compliance Gaps Leave You Exposed

Static credentials cannot attribute specific API calls to individual workloads or provide granular revocation when incidents occur. When auditors ask “which service accessed this database at 3 AM last Tuesday?” static credentials leave you with incomplete answers.

Solution: Generate detailed logs showing workload identity, requested resource, policy evaluation, and outcome for every access decision.
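One possible shape for such a log entry is sketched below. The field names and the `audit_line` helper are assumptions for illustration; actual audit schemas vary by platform.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class AccessDecision:
    workload: str    # verified workload identity
    resource: str    # what was requested
    action: str      # requested operation
    policy: str      # which policy was evaluated
    outcome: str     # "allow" or "deny"
    timestamp: str   # ISO 8601, UTC


def audit_line(workload: str, resource: str, action: str, policy: str,
               allowed: bool, at: Optional[datetime] = None) -> str:
    """Serialize one access decision as a single JSON log line."""
    at = at or datetime.now(timezone.utc)
    record = AccessDecision(
        workload=workload,
        resource=resource,
        action=action,
        policy=policy,
        outcome="allow" if allowed else "deny",
        timestamp=at.isoformat(),
    )
    return json.dumps(asdict(record), sort_keys=True)
```

With one line per decision, the "3 AM last Tuesday" question becomes a filter on `resource` and `timestamp` rather than a forensic exercise.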

How Dynamic, Context-Aware Authorization Solves the Problem

The system operates through three interconnected components that work as an integrated flow. Environment attestation establishes workload identity without requiring stored credentials. Kubernetes pods present service account tokens, AWS workloads leverage IAM roles with STS token exchange, and container environments provide signed metadata that cryptographically verifies runtime authenticity.

This identity proof feeds directly into policy engines that evaluate every access request against real-time context. Location, time, workload posture, and resource sensitivity all influence authorization decisions. Unlike static role assignments, policies can examine dynamic factors—namespace, deployment environment, device posture, even time of day—making access decisions that adapt to current conditions.
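A context-aware policy check might look roughly like the following. The `Policy` and `RequestContext` types are hypothetical and far simpler than a production policy engine, but they show how dynamic factors enter the decision.

```python
from dataclasses import dataclass
from datetime import time
from typing import FrozenSet, Tuple


@dataclass(frozen=True)
class RequestContext:
    namespace: str
    environment: str
    posture_healthy: bool
    local_time: time


@dataclass(frozen=True)
class Policy:
    allowed_namespaces: FrozenSet[str]
    allowed_environments: FrozenSet[str]
    window_start: time
    window_end: time  # this sketch does not handle windows that cross midnight
    require_healthy_posture: bool = True


def evaluate(policy: Policy, ctx: RequestContext) -> Tuple[bool, str]:
    """Return (allowed, reason). Any failed condition denies the request."""
    if ctx.namespace not in policy.allowed_namespaces:
        return False, "namespace not permitted"
    if ctx.environment not in policy.allowed_environments:
        return False, "environment not permitted"
    if policy.require_healthy_posture and not ctx.posture_healthy:
        return False, "workload posture unhealthy"
    if not (policy.window_start <= ctx.local_time <= policy.window_end):
        return False, "outside permitted time window"
    return True, "all conditions met"
```

The same workload identity can therefore be allowed at 10:00 and denied at 03:00, or denied the moment its posture signal turns unhealthy.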

After policy approval, just-in-time (JIT) credential brokers issue ephemeral tokens scoped to specific operations.

Brokers are specialized services that dynamically generate and inject access credentials. The tokens they issue typically expire within minutes and carry only the permissions necessary for the immediate task.

The workload receives credentials exactly when needed and never stores them persistently.
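The broker's core job can be sketched in a few lines. This example uses an HMAC-signed token built from the Python standard library purely for illustration; real brokers typically issue platform-native credentials or standard signed tokens, and the function names and five-minute default here are assumptions.

```python
import base64
import hashlib
import hmac
import json
import secrets
import time
from typing import Optional

# In this sketch the signing key lives only inside the broker process.
SIGNING_KEY = secrets.token_bytes(32)


def issue_token(workload: str, scope: str, ttl_seconds: int = 300,
                now: Optional[float] = None) -> str:
    """Issue a token bound to one workload and one scope, valid for ttl_seconds."""
    now = time.time() if now is None else now
    body = json.dumps(
        {"sub": workload, "scope": scope, "exp": now + ttl_seconds},
        sort_keys=True,
    ).encode()
    sig = hmac.new(SIGNING_KEY, body, hashlib.sha256).digest()
    return (base64.urlsafe_b64encode(body).decode() + "."
            + base64.urlsafe_b64encode(sig).decode())


def check_token(token: str, required_scope: str,
                now: Optional[float] = None) -> bool:
    """Accept only an untampered, unexpired token carrying the required scope."""
    now = time.time() if now is None else now
    try:
        body_b64, sig_b64 = token.split(".")
        body = base64.urlsafe_b64decode(body_b64)
        sig = base64.urlsafe_b64decode(sig_b64)
    except ValueError:  # wrong segment count or invalid base64
        return False
    expected = hmac.new(SIGNING_KEY, body, hashlib.sha256).digest()
    if not hmac.compare_digest(sig, expected):
        return False
    claims = json.loads(body)
    return claims["scope"] == required_scope and claims["exp"] > now
```

A token stolen from this flow is useful for one scope and for at most a few minutes, which is the practical meaning of "ephemeral" in this design.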

System-Wide Benefits

This integrated architecture simultaneously eliminates the core problems that plague static secret management:

- Eliminates credential sprawl entirely: Workloads never possess stored credentials to duplicate across repositories, CI/CD pipelines, or deployment configurations. Platform teams stop tracking credential locations because there’s nothing to track.

- Removes rotation coordination problems: Tokens expire automatically without requiring coordinated updates across environments. Applications never experience rotation-related outages because they request fresh credentials for each operation rather than relying on stored values that need periodic updates.

- Resolves the secret-zero paradox: Workloads authenticate through cryptographic verification of their runtime environment, eliminating the recursive “secret to get secrets” problem that traditional secrets management creates. Cloud platforms provide this identity foundation through existing mechanisms like IAM roles and service accounts.

- Builds in compliance automation: Every access decision generates detailed logs showing workload identity, requested resource, policy evaluation, and outcome. Unlike static credentials that show “who had access,” this approach logs “who accessed what, when, and under what conditions”—providing the granular attribution that SOC 2 and ISO 27001 frameworks demand.

Implementation Strategies Across Workloads

Moving from static secrets to dynamic authorization requires a structured approach that minimizes disruption while maximizing security improvements. Organizations that succeed treat this as an infrastructure transformation, not a simple tool replacement.

Phase 1: Discovery and Foundation Setting

- Inventory current secrets and access paths. Document every static secret currently in use—API keys in repositories, database passwords in configuration files, service account tokens in CI/CD systems.

- Create a dependency map showing which workloads rely on each credential. This baseline drives prioritization decisions and prevents surprises during migration.

- Establish identity foundations. Enable workload identity sources before attempting credential replacement. Configure AWS STS for EC2 and Lambda workloads. Ensure Kubernetes service accounts have proper RBAC permissions. The identity infrastructure must function reliably before eliminating stored credentials.
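A first-pass inventory can start with a simple pattern scan of repositories and configuration directories. The sketch below is a starting point under stated assumptions: the three patterns are illustrative, will miss many secret formats, and will produce false positives, so dedicated secret scanners remain the better long-term tool.

```python
import re
from pathlib import Path
from typing import Dict, List, Tuple

# Illustrative patterns only; extend or replace them for your environment.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_block": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "password_assignment": re.compile(r"(?i)\bpassword\s*[:=]\s*['\"]?[^\s'\"]{8,}"),
}


def scan_text(text: str) -> List[Tuple[int, str]]:
    """Return (line_number, pattern_name) for every suspicious line."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


def scan_tree(root: str) -> Dict[str, List[Tuple[int, str]]]:
    """Scan every readable UTF-8 text file under root."""
    results = {}
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue  # skip binaries and unreadable files
        hits = scan_text(text)
        if hits:
            results[str(path)] = hits
    return results
```

The output of `scan_tree` gives you file paths and line numbers to feed into the dependency map, which is where prioritization decisions get made.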

Phase 2: Choose Deployment Patterns

- Choose deployment patterns based on workload characteristics. Containerized applications work well with Kubernetes sidecars that intercept traffic and inject tokens transparently. Legacy infrastructure running on VMs or bare metal needs agent-based approaches that integrate with existing authentication flows. Serverless functions require extensions that handle credential injection during cold starts. Legacy applications that can’t be modified benefit from edge gateways that manage authentication externally.

- Design least-privilege policies from the start. Begin with read-only permissions and expand based on observed behavior. Create workload-specific policies that grant access only to resources the service actually needs.

- Avoid broad “allow all” policies that recreate the over-provisioning problems of static secrets. Policy templates help teams get started without building everything from scratch.
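One way to keep "allow all" rules out of a policy set is to lint policies before they are accepted. The policy layout below (a `rules` list with `workload`, `resource`, and `actions` fields) is a made-up schema for illustration, not the format of any particular policy engine.

```python
from typing import List


def lint_policy(policy: dict) -> List[str]:
    """Flag rules that are missing a scope or that use wildcards."""
    problems = []
    for index, rule in enumerate(policy.get("rules", [])):
        for field in ("workload", "resource"):
            value = rule.get(field)
            if not value:
                problems.append(f"rule {index}: missing {field}")
            elif "*" in value:
                problems.append(f"rule {index}: wildcard in {field}")
        actions = rule.get("actions") or []
        if not actions:
            problems.append(f"rule {index}: no actions listed")
        elif "*" in actions:
            problems.append(f"rule {index}: wildcard action")
    return problems
```

An empty result means every rule names a specific workload, a specific resource, and an explicit list of actions, which is a reasonable minimum bar for least privilege.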

Phase 3: Integration and Testing

- Integrate security posture signals for conditional access. Connect to endpoint security tools, cloud security posture management tools, or similar platforms that provide real-time risk assessment. Policies can then consider workload health alongside identity when making authorization decisions.

- Pilot in non-production environments first. Measure key metrics: token lifetime appropriateness, denied request rates, and authorization latency.

- Target sub-20ms response times for policy decisions. Monitor application behavior to ensure dynamic authorization doesn’t break existing functionality. Use this phase to refine policies and identify integration issues.
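The pilot metrics above can be summarized with a few lines of code. This sketch assumes you have already collected per-request latencies in milliseconds and a list of "allow"/"deny" outcomes; the choice of the 95th percentile and the nearest-rank method are assumptions, not requirements.

```python
import math
from typing import Dict, List, Sequence, Union


def percentile(samples: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile of a non-empty sample."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def pilot_summary(latencies_ms: Sequence[float], decisions: List[str],
                  target_ms: float = 20.0) -> Dict[str, Union[float, bool]]:
    """Summarize authorization latency and denial rate for a pilot run."""
    p95 = percentile(latencies_ms, 95)
    denied = sum(1 for d in decisions if d == "deny")
    return {
        "p95_ms": p95,
        "denied_rate": denied / len(decisions),
        "meets_target": p95 < target_ms,
    }
```

Looking at a high percentile rather than the mean matters here, because a handful of slow policy decisions can stall requests even when the average looks healthy.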

Phase 4: Production Rollout and Automation

Expand gradually with automated validation. Roll out to production workloads incrementally, starting with the least critical services. Integrate policy testing into CI/CD pipelines to catch configuration errors before deployment. Monitor audit logs to verify that access patterns match expectations and policies are working as designed.
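Policy testing in a pipeline can be as simple as a table of expected decisions that runs on every change. The sketch below uses Python's built-in `unittest` with a toy in-memory policy; the `POLICY` structure, `is_allowed`, and the workload names are hypothetical stand-ins for a call to your real policy engine.

```python
import unittest

# Toy policy: (workload, resource) -> set of permitted actions.
POLICY = {
    ("payments-api", "orders-db"): {"read", "write"},
    ("reporting", "orders-db"): {"read"},
}


def is_allowed(workload: str, resource: str, action: str) -> bool:
    """Default deny: anything not listed is refused."""
    return action in POLICY.get((workload, resource), set())


class PolicyRegressionTest(unittest.TestCase):
    # (workload, resource, action, expected decision)
    CASES = [
        ("payments-api", "orders-db", "write", True),
        ("reporting", "orders-db", "read", True),
        ("reporting", "orders-db", "write", False),
        ("unknown-svc", "orders-db", "read", False),
    ]

    def test_expected_decisions(self):
        for workload, resource, action, expected in self.CASES:
            with self.subTest(workload=workload, action=action):
                self.assertEqual(is_allowed(workload, resource, action), expected)


def run_policy_tests() -> bool:
    """Run the regression suite; a CI job would fail the build on False."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(PolicyRegressionTest)
    result = unittest.TextTestRunner(verbosity=0).run(suite)
    return result.wasSuccessful()
```

Including explicit deny cases is the important part: they catch a policy change that accidentally widens access, which a test suite of only "allow" cases would never notice.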

Common Challenges and Solutions

- Over-permissive policies often emerge during initial implementation. Teams create broad “allow *” rules to avoid breaking applications.

- Solution: Fix this with namespace scoping and resource-specific tagging that enables granular permissions without complexity.

- Token issuance failures typically stem from infrastructure issues.

- Solution: Check for clock skew between policy engines and workloads. Verify that metadata services are reachable from all deployment environments. Network connectivity problems between workloads and identity providers cause most authorization failures.

- Latency spikes can occur when policy engines become bottlenecks.

- Solution: Enable local caching with 60-second TTLs to reduce repeated policy evaluations. Deploy policy engines close to workloads to minimize network round-trips.
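The local caching suggestion can be sketched as a small time-to-live cache in front of the policy engine. `DecisionCache` and its method names are illustrative; the 60-second default mirrors the TTL mentioned above.

```python
import time
from typing import Callable, Dict, Hashable, Tuple


class DecisionCache:
    """Caches policy decisions for a short TTL to avoid repeated evaluations."""

    def __init__(self, ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[Hashable, Tuple[float, bool]] = {}

    def get_or_evaluate(self, key: Hashable, evaluate: Callable[[], bool]) -> bool:
        now = self._clock()
        hit = self._entries.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]  # still fresh: skip the policy engine
        decision = evaluate()
        self._entries[key] = (now + self._ttl, decision)
        return decision
```

The trade-off is worth stating: a cached "allow" can outlive a policy change or revocation by up to the TTL, so the TTL should stay short for sensitive resources.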

Proven Results from Production Deployments

Organizations implementing dynamic authorization report measurable improvements in both security posture and operational efficiency.

- Implementations of dynamic authorization across internal services have delivered significant reductions in credential operations, saving substantial engineering effort from manual secret management tasks.

- Firms have achieved significant return on investment after deploying dynamic authorization to unify access control across multi-cloud environments. These implementations provide unified audit logging and eliminate compliance violations from duplicated static credentials.

These reported outcomes suggest that transitioning from static secrets to dynamic authorization can deliver operational benefits while strengthening security architecture.

Readiness Checklist Before Implementation

Before implementing dynamic authorization, verify your infrastructure meets these foundational requirements:

Infrastructure Readiness:

- Credential inventory complete and dependency mapping finished

- Cloud IAM federation configured across target environments

- Centralized logging pipeline established for audit capture

- Observability tools integrated for monitoring authorization decisions

Implementation Readiness:

- Workload catalog documented with access patterns

- Cross-environment policies tested in staging

- Emergency access procedures defined for policy failures

- Rollback procedures validated for each deployment pattern

Start Your Implementation Now

Start with one low-risk microservice that has predictable access patterns. Choose a service with limited external dependencies to reduce complexity during initial implementation. Use this pilot to validate your policy engine deployment, measure authorization latency, and refine operational procedures.

Organizations benefit from systematic readiness assessment before attempting large-scale deployment. The implementation complexity scales with infrastructure diversity—teams managing simple, homogeneous environments can move faster than those with mixed legacy and cloud-native architectures.

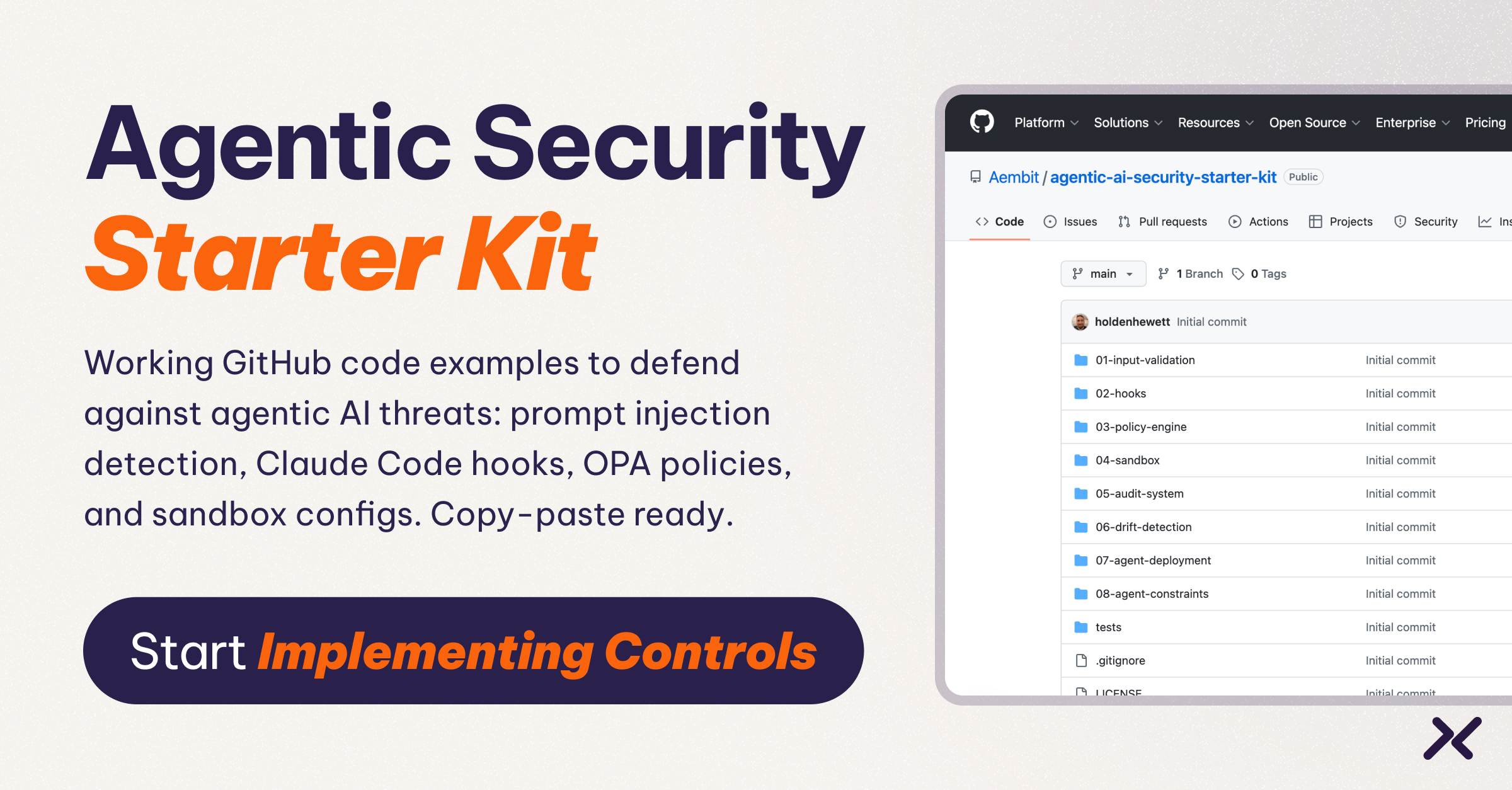

For comprehensive implementation guidance tailored to specific infrastructure patterns, Aembit publishes detailed deployment guides covering Kubernetes, serverless, and multi-cloud scenarios.

The transition from static secrets to dynamic authorization requires careful planning, but the operational and security benefits justify the investment for any organization serious about cloud-native security architecture.