It’s 3:14 a.m., and an AI workload queries a cloud database, pulling customer data to generate a sales forecast. Moments later, it sends the results to an automation script that updates the CRM, triggering downstream workflows.

This isn’t a breach. But for a CISO, it’s the kind of scenario that can spark sleepless nights.

AI-driven interactions like this – entirely free of human involvement – are routine in enterprises today, driving efficiency and enabling real-time decision-making, but also introducing risk.

Two years after ChatGPT’s groundbreaking launch, the transformative potential of artificial intelligence and machine learning is undeniable within the enterprise. According to Gartner, by 2026, more than 80% of enterprises will have used generative AI APIs or deployed generative AI-enabled applications. The benefits are clear: These systems supercharge productivity, enable more informed decisions, and unlock new capabilities in automation and personalization.

But the threats they invite are also profound. Malicious actors are leveraging AI in two distinct ways: to enhance their attack capabilities and to exploit the AI systems embedded in enterprise workflows.

On one hand, cybercriminals use AI to automate phishing campaigns, generate highly convincing fake content, or even craft malware at scale, making their attacks more efficient and harder to detect.

On the other, the growing reliance on AI workloads by enterprises – like customer-facing bots, recommendation engines, and decision-making agents – has created a new attack surface. AI workloads – including LLMs and agents – typically interact with sensitive systems using static credentials like API keys or certificates, which attackers can exploit to impersonate workloads, extract data, or manipulate workflows.

These systems, when secured with legacy methods, become vulnerable to exploitation through techniques like prompt injection, model manipulation, and secret theft, as discussed below.

The recent breach of Hugging Face’s Spaces platform illustrates these risks. While the exposure of authentication tokens was concerning, the platform’s robust architecture helped contain potential damage. Nevertheless, the incident highlighted the importance of properly securing machine identities and access secrets in AI systems.

The Evolution of Identity Management for AI Workloads

Traditional IAM systems, while robust for human identities, need to evolve to address the unique challenges of AI workload authentication and authorization. These non-human identities operate at machine speed, often conducting thousands of interactions per second across complex ecosystems. They don’t follow typical user patterns – no logging in with multi-factor authentication or requesting password resets. Instead, they rely on programmatic access through various forms of machine identity.

Moreover, the dynamic and autonomous nature of AI workloads means they are constantly evolving, learning, and interacting with other systems. This creates access-management challenges that go beyond traditional IAM capabilities. NIST’s AI Risk Management Framework, released in July, acknowledges that to secure the AI attack surface, “conventional cybersecurity practices may need to adapt or evolve.”

The Attack Surface: Six Key Threats to AI Workloads

Drawing on the OWASP Top 10 for LLM Applications, AI workloads present several unique security challenges. Here are the key threats organizations need to address:

1) Prompt Injection Attacks: Unlike traditional systems that rely on structured queries, LLMs are vulnerable to carefully crafted text prompts that can manipulate their outputs or bypass safety mechanisms. Attackers can use this to extract sensitive information or trigger actions the system was never meant to take.

2) Data Poisoning: While many production AI systems implement safeguards against online learning, data poisoning remains a risk, particularly during model training or updating phases. Attackers might attempt to inject malicious data that compromises the model’s decision-making capabilities.

3) Model Manipulation and Unauthorized Access: Rather than traditional “hijacking,” attackers exploit poorly secured LLM deployments to generate malicious content, manipulate workflows, or misappropriate the model’s capabilities. For instance, an attacker gaining access to an internal LLM’s API could generate convincing phishing emails that appear to originate from within the organization.

4) Secret and Token Exploitation: Static secrets remain a significant vulnerability. API keys, service account tokens, and other sensitive credentials are often hardcoded into scripts or stored insecurely. Once compromised, these secrets allow attackers to impersonate workloads and access sensitive resources.

5) Supply Chain Exploitation: AI workloads rely on extensive supply chains, including third-party libraries, pretrained models, and cloud APIs. Vulnerabilities in these dependencies can provide attack vectors for system infiltration.

6) Excessive Autonomy: AI agents operating autonomously need careful constraints. Without proper guardrails, attackers can exploit logic flaws or misconfigurations to manipulate these systems indirectly.
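Threat 4 starts with credentials hardcoded into scripts. A common first line of defense is scanning source for secret-shaped strings before it is committed. The sketch below is a minimal, heuristic scanner, not a replacement for a dedicated secret-scanning tool; the rule names and the generic pattern are illustrative assumptions, and the Hugging Face token length is an approximation.

```python
import re
from typing import List, NamedTuple


class Finding(NamedTuple):
    line_no: int
    rule: str


# Illustrative rules only; real scanners ship far larger, tuned rule sets.
RULES = {
    # AWS access key IDs begin with "AKIA" followed by 16 uppercase letters/digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # Hugging Face user tokens begin with "hf_"; the minimum length here is an assumption.
    "hugging_face_token": re.compile(r"\bhf_[A-Za-z0-9]{30,}\b"),
    # A literal string of 8+ chars assigned to a credential-sounding name.
    "generic_assignment": re.compile(
        r"""(?i)\b(?:api[_-]?key|secret|token|password)\s*[:=]\s*["'][^"']{8,}["']"""
    ),
}


def scan(text: str) -> List[Finding]:
    """Return (line number, rule name) for every line that looks like it holds a secret."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in RULES.items():
            if pattern.search(line):
                findings.append(Finding(line_no, rule))
    return findings
```

A line such as `token = os.environ["CRM_TOKEN"]` is not flagged, because the value is read at runtime rather than embedded in the file, which is the pattern the scanner is meant to push developers toward.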

Actionable Strategies to Secure Access to AI Workloads

Securing AI workloads requires a multifaceted approach. These systems don’t operate in silos—they interact with sensitive data, communicate across complex infrastructures, and rely on vast supply chains. Protecting them demands a blend of traditional and modern security practices tailored to the unique challenges they present.

Here are some strategies to consider:

Deploy Non-Human IAM

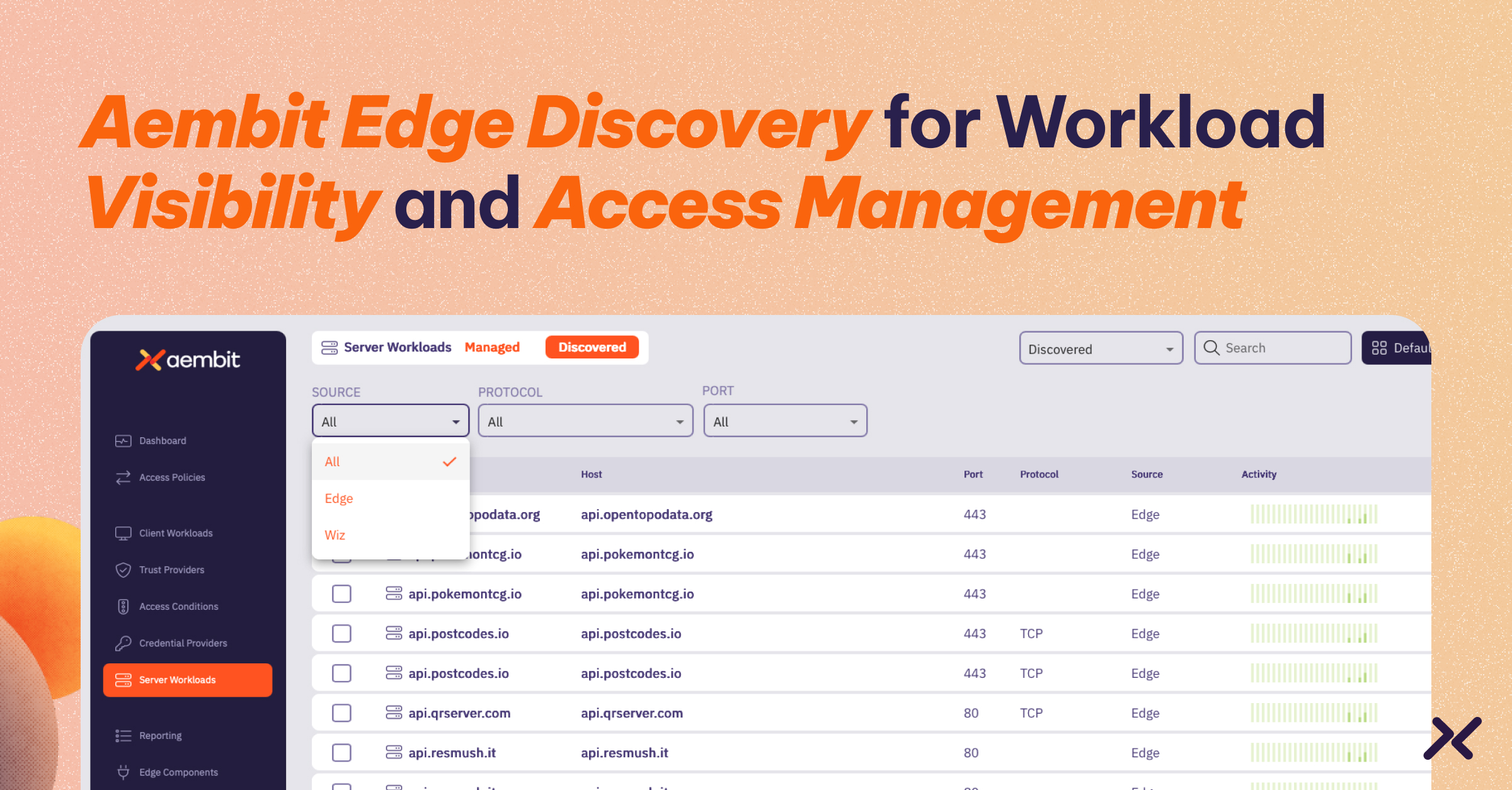

Replace static secrets with modern approaches designed for machine-to-machine communication. Non-human workload IAM solutions help secure AI workloads by:

- Enforcing Policy-Based, Conditional Access: Authenticate and authorize workloads dynamically, using real-time posture checks.

- Implementing Ephemeral, Just-in-Time Credentials: Use short-lived tokens tied to specific workloads, which expire quickly to minimize misuse.

- Standardizing Authentication Protocols: Leverage OAuth 2.0’s client credentials flow to ensure secure and interoperable application-to-service communication.
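To make the client credentials flow and short-lived tokens concrete, here is a minimal sketch using only the Python standard library. It builds (but does not send) a token request in the shape RFC 6749 section 4.4 describes, and caches the returned access token only until shortly before it expires. The endpoint URL, client ID, and scope are hypothetical; the sketch also assumes the server returns `expires_in`, which the RFC recommends but does not require. Note that the client secret itself is still a long-lived credential and should be protected accordingly.

```python
import base64
import json
import time
import urllib.parse
import urllib.request
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical token endpoint; substitute your authorization server's URL.
TOKEN_URL = "https://auth.example.com/oauth2/token"


def build_token_request(client_id: str, client_secret: str, scope: str) -> urllib.request.Request:
    """Build (but do not send) an OAuth 2.0 client credentials token request."""
    body = urllib.parse.urlencode(
        {"grant_type": "client_credentials", "scope": scope}
    ).encode("ascii")
    # RFC 6749 section 2.3.1: form-encode the ID and secret before HTTP Basic encoding.
    user = urllib.parse.quote_plus(client_id, safe="")
    password = urllib.parse.quote_plus(client_secret, safe="")
    basic = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        TOKEN_URL,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


@dataclass
class AccessToken:
    value: str
    expires_at: float  # Unix timestamp

    def is_fresh(self, now: float, skew: float = 30.0) -> bool:
        # Treat the token as stale a little early so it never expires mid-request.
        return now < self.expires_at - skew


def parse_token_response(raw: bytes, now: float) -> AccessToken:
    """Turn a JSON token response into an AccessToken (assumes 'expires_in' is present)."""
    payload = json.loads(raw)
    return AccessToken(payload["access_token"], now + float(payload["expires_in"]))


class TokenCache:
    """Holds one short-lived token and fetches a replacement only when it goes stale."""

    def __init__(self, fetch: Callable[[], AccessToken]):
        self._fetch = fetch
        self._token: Optional[AccessToken] = None

    def get(self, now: Optional[float] = None) -> str:
        now = time.time() if now is None else now
        if self._token is None or not self._token.is_fresh(now):
            self._token = self._fetch()
        return self._token.value
```

In a real deployment, `fetch` might be something like `lambda: parse_token_response(urllib.request.urlopen(req).read(), time.time())`. Keeping the cache separate from the network call makes it easy to test and keeps every workload on a token that expires within minutes rather than a key that lives for years.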

Enforce Least-Privilege Access

Excessive permissions in AI workloads introduce unnecessary risks. Reduce exposure by:

- Implementing Granular, Context-Aware Access Controls: Limit access to only the resources necessary for specific tasks.

- Dynamically Adjusting Permissions: Modify permissions based on workload behavior, posture, or environmental factors.

- Applying Task-Specific Constraints: For example, an AI fraud detection model should only access data relevant to its function.
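The three practices above can be combined into a single deny-by-default authorization check. The sketch below is illustrative only: the policy table, workload names, and context keys (`posture_ok`, `risk`) are hypothetical, and a production system would typically delegate this to a policy engine rather than a dictionary.

```python
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class Grant:
    resource: str
    actions: frozenset


# Hypothetical policy table: each workload is granted only what its task needs.
POLICIES: Mapping[str, tuple] = {
    "fraud-detector": (Grant("transactions", frozenset({"read"})),),
    "forecast-bot": (
        Grant("customers", frozenset({"read"})),
        Grant("crm", frozenset({"write"})),
    ),
}

READ_ONLY_ACTIONS = frozenset({"read"})


def is_allowed(workload: str, resource: str, action: str, context: Mapping[str, object]) -> bool:
    """Deny by default; allow only an explicit grant that the current context permits."""
    # Context-aware control: a workload that fails its posture check gets nothing.
    if not context.get("posture_ok", False):
        return False
    # Dynamic adjustment: under elevated risk, drop every workload to read-only.
    if context.get("risk") == "elevated" and action not in READ_ONLY_ACTIONS:
        return False
    # Task-specific constraint: the (resource, action) pair must be explicitly granted.
    return any(
        g.resource == resource and action in g.actions
        for g in POLICIES.get(workload, ())
    )
```

Here the fraud detection model can read transactions but nothing else, mirroring the example in the last bullet, and an unknown workload is refused because it has no entry in the table.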

Continuously Monitor Workload Behavior

AI workloads require constant vigilance, as they operate differently from human users. Strengthen security by:

- Using Anomaly Detection Tools: Identify unusual patterns, such as unexpected resource access or data transfers.

- Automating Responses: Isolate suspicious workloads and alert the security team immediately upon detecting anomalies.

- Establishing Baseline Behavior: Compare real-time activity against expected patterns to quickly identify deviations.
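A simple way to tie baselining, anomaly detection, and automated response together is a z-score check against historical activity. The sketch below assumes a single numeric metric per workload (for example, records read per hour) and a fixed threshold of three standard deviations; both are illustrative choices, and real deployments usually need per-metric, time-aware baselines.

```python
import statistics
from dataclasses import dataclass, field
from typing import List, Sequence, Set, Tuple

Baseline = Tuple[float, float]  # (mean, population standard deviation)


def build_baseline(samples: Sequence[float]) -> Baseline:
    """Summarize historical activity for one workload."""
    return statistics.fmean(samples), statistics.pstdev(samples)


def z_score(value: float, baseline: Baseline) -> float:
    """How many standard deviations a new observation sits from the baseline mean."""
    mean, std = baseline
    if std == 0:
        return 0.0 if value == mean else float("inf")
    return abs(value - mean) / std


@dataclass
class WorkloadMonitor:
    baseline: Baseline
    threshold: float = 3.0
    quarantined: Set[str] = field(default_factory=set)
    alerts: List[str] = field(default_factory=list)

    def observe(self, workload: str, value: float) -> bool:
        """Return True if the value is within baseline; otherwise isolate the workload and alert."""
        score = z_score(value, self.baseline)
        if score <= self.threshold:
            return True
        self.quarantined.add(workload)  # automated response: isolate
        self.alerts.append(f"{workload}: value {value} is {score:.1f} std devs from baseline")
        return False
```

In practice, the `quarantined` set would feed whatever actually revokes access (for example, the authorization check in the previous section), and `alerts` would be routed to the security team's paging or ticketing system.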

Secure the AI Supply Chain

AI workloads depend on a complex ecosystem of third-party tools and libraries. Protect this chain by:

- Validating Dependencies: Use automated vulnerability scans and cryptographic checks to ensure third-party components are secure.

- Testing in Sandbox Environments: Isolate and assess third-party tools before integrating them into production systems.

- Avoiding Unverified Integrations: Ensure only thoroughly vetted components are used in live environments.
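One concrete form of the “cryptographic checks” mentioned above is hash pinning: record the SHA-256 digest of each vetted artifact (model weights, wheels, scripts), then refuse to load anything that is missing, altered, or not on the list. pip’s `--require-hashes` mode applies the same idea to Python packages. The sketch below is a minimal, hypothetical version for a directory of model files; where the pinned digests are stored and signed is left as an assumption.

```python
import hashlib
from pathlib import Path
from typing import Dict, List


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 so large model weights never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_mismatches(actual: Dict[str, str], pinned: Dict[str, str]) -> List[str]:
    """List artifacts that are missing, altered, or present but never vetted."""
    problems = []
    for name, expected in pinned.items():
        got = actual.get(name)
        if got is None:
            problems.append(f"missing: {name}")
        elif got.lower() != expected.lower():
            problems.append(f"digest mismatch: {name}")
    for name in sorted(actual.keys() - pinned.keys()):
        problems.append(f"unpinned: {name}")
    return problems


def verify_directory(root: Path, pinned: Dict[str, str]) -> List[str]:
    """Hash every file under root (keyed by relative path) and compare against the pins."""
    actual = {
        p.relative_to(root).as_posix(): sha256_of(p)
        for p in root.rglob("*")
        if p.is_file()
    }
    return find_mismatches(actual, pinned)
```

An empty list from `verify_directory` means every file matches a vetted digest; anything else should block promotion to production.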

Limit Autonomy with Guardrails

Autonomous AI workloads can pose risks if left unchecked. Mitigate these risks by:

- Establishing Governance Frameworks: Define parameters to ensure workloads operate within acceptable boundaries.

- Integrating Human Oversight: Trigger reviews for high-stakes actions, such as financial approvals or regulatory decisions.

- Applying Logical Constraints: Prevent exploitation by setting clear operational thresholds for autonomous agents.
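The guardrails above can be expressed as a small gate that every agent action passes through before execution. In this sketch, the action kinds, the 1,000 auto-approval limit, and the 50-actions-per-run cap are hypothetical parameters standing in for an organization’s own governance framework.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    NEEDS_HUMAN_REVIEW = "needs_human_review"
    DENY = "deny"


@dataclass(frozen=True)
class Action:
    kind: str
    amount: float = 0.0  # monetary value, if any


# Hypothetical governance parameters; tune these to your own policies.
ALLOWED_KINDS = {"crm_update", "report", "financial_approval", "regulatory_filing"}
HIGH_STAKES_KINDS = {"financial_approval", "regulatory_filing"}
AUTO_APPROVE_LIMIT = 1_000.0
MAX_ACTIONS_PER_RUN = 50


def evaluate(action: Action, actions_so_far: int) -> Decision:
    """Decide whether an autonomous agent may perform an action on its own."""
    # Governance boundary: anything outside the approved action set is refused.
    if action.kind not in ALLOWED_KINDS:
        return Decision.DENY
    # Logical constraint: cap how much an agent can do in a single run.
    if actions_so_far >= MAX_ACTIONS_PER_RUN:
        return Decision.DENY
    # Human oversight: high-stakes or high-value actions wait for a reviewer.
    if action.kind in HIGH_STAKES_KINDS or action.amount > AUTO_APPROVE_LIMIT:
        return Decision.NEEDS_HUMAN_REVIEW
    return Decision.ALLOW
```

Returning an explicit `NEEDS_HUMAN_REVIEW` rather than silently allowing or denying lets the surrounding workflow queue the action for approval, so an agent running at 3:14 a.m. can keep working on routine tasks while high-stakes decisions wait for a person.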

To try the Aembit Workload IAM platform for free and learn how it can help you secure AI and LLMs, visit aembit.io.
