AI identity is not yet a fully formed concept.

We have a concept of identity for humans (workforce and customers), and we have identity for non-humans (applications, workloads, scripts), but as we will explore, AI requires a little bit of both. And that may mean something new entirely.

It’s likely your developers are not thinking about what an AI identity is, nor how it should be managed. They are thinking, instead, about how to make an agent do what they want it to do.

And there’s nothing wrong with this: As an emerging technology, agentic AI’s first hurdle is to achieve new, powerful outcomes. Everything else will follow.

We have hopefully learned enough in our industry such that, as agents come into mainstream enterprise use, we will build security in from the start. (Hey, I can dream, can’t I?)

While there are many factors to consider with agentic AI security, I’ve been focusing my time talking to experts about the complex challenge of identity management for AI agents.

Central to this challenge is a crucial question: What kind of identity should your AI agent possess? Should your AI agent adopt a human-like identity, a strictly non-human identity, or something else?

Ask three developers and you’ll get four opinions. In such a quickly evolving space, these answers will change just as rapidly. But let’s use this opportunity to frame the debate.

Why Identity Matters for Agentic AI

We’ve all heard the adage “Identity is the new perimeter,” but identity for AI agents introduces a new class of challenges.

Identity itself provides many benefits: it controls access, it serves as a security control, and it forms the basis for auditing. Thus, the idea of having an identity for AI isn’t far-fetched. Traditional human identity systems are designed around predictable behaviors, stable access patterns, and long-lived entities. In contrast, AI agents are dynamic, ephemeral, and autonomous.

In that respect, agentic AI sounds a whole lot like a non-human identity (NHI). In this context, agents look like workloads that spin up, do their job, and shut down.

So, case closed? AI is non-human, right? Not so fast.

One of the big differences between AI agents and NHIs is determinism. NHIs, like applications and scripts, have a static set of capabilities and workflows, and hence a fixed set of permissions to provision, even if the workloads are highly ephemeral.

Agents may spin up on demand, call APIs across domains, and generate actions based on their own reasoning rather than direct human input. As such, managing identity for AI agents must accommodate not only the basics – authentication, authorization, and auditability – but also deeper concerns around autonomy, delegation, contextual reasoning, and lifecycle boundaries.

AI agents are designed to take whatever actions are necessary to accomplish a goal – often with no fixed sequence or predefined access pattern. As a result, their actions may vary from one activity to the next, and even from one attempt to the next, resulting in non-deterministic outcomes, non-deterministic actions, and hence non-deterministic needs.

In essence, AI agents push the boundaries of identity beyond static provisioning. They require identity systems that are not just reactive but also predictive and adaptive – capable of understanding and enforcing identity posture in environments where the actor is constantly shifting roles, context, and risk profile.

Today, the answer to what identity an AI agent should have is strictly “it depends,” but hopefully we can answer it more concretely as the space matures. In the meantime, let’s take a look at three different AI agents and see how their requirements drive the choice of identity.

Scenario 1: When an AI Agent Behaves Like a Human

Consider a customer support chatbot integrated into customer relationship management (CRM) and communication platforms. The chatbot uses retrieval-augmented generation (RAG) to dynamically retrieve and present customer data, merging it with an LLM trained on documentation and a knowledge base.

This AI agent is performing tasks indistinguishable from those of human agents. A human-like identity is compelling here because it simplifies integration into existing human-centric workflows. So, should we just give our AI agent a username and assign it a record in Okta so it can operate like any other employee? Or, more likely, just delegate it a set of your permissions via OAuth, so that it can act on your behalf?

This idea of delegated authority for AI agents introduces notable complexities. Human-like identities integrate smoothly with traditional identity and access management (IAM) providers like Azure AD, Google Workspace, or Okta, yet this ease comes with risks.

Overly broad permissions, intended for human flexibility, can lead to serious security vulnerabilities, including privilege escalation and increased potential for breaches.

Scopes that are acceptable for a human user, because we assume good judgment and context awareness, can become a significant risk in the hands of an autonomous AI agent, which will gladly analyze its scope and take advantage of broader permissions in order to achieve its goal.

Just think about the human who inadvertently delegates admin authority to a critical system, and let your mind wander for a few minutes on what could go wrong.
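One way to picture the risk is to narrow a delegation before the agent ever sees it. The following is a minimal sketch, not a prescribed implementation: the scope names and the `delegate_scopes` helper are hypothetical, and real scope strings depend on your identity provider.

```python
# Hypothetical scope names, used only for illustration.
HIGH_RISK_SCOPES = {"admin", "users:write", "billing:write"}


def delegate_scopes(user_scopes: set[str], task_scopes: set[str]) -> set[str]:
    """Return the scopes an agent may receive for a single task.

    The agent gets only what the user holds AND the task needs,
    minus anything flagged as high risk.
    """
    return (user_scopes & task_scopes) - HIGH_RISK_SCOPES


user = {"crm:read", "crm:write", "email:send", "admin"}
task = {"crm:read", "email:send", "admin"}

print(sorted(delegate_scopes(user, task)))  # ['crm:read', 'email:send']
```

Even though the user holds `admin` and the task asks for it, the delegation drops it, so an inadvertent grant does not flow through to the agent.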

Further complicating matters, human-like identities for AI can obscure clear audit trails. Actions taken by AI agents might be indistinguishable from genuine human actions during incident responses or compliance audits. This opacity can present significant regulatory hurdles, particularly within highly regulated industries like finance or health care.
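A common mitigation is to record both the actor and the person it acted for in every audit event. The sketch below assumes a simple JSON log line; the field names (`actor_id`, `actor_type`, `on_behalf_of`) are illustrative choices, not a standard schema.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def audit_event(actor_id: str, actor_type: str, action: str,
                on_behalf_of: Optional[str] = None) -> str:
    """Build one JSON audit line that keeps the agent distinct from the human."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor_id": actor_id,          # who actually performed the action
        "actor_type": actor_type,      # e.g. "human", "non-human", "agent"
        "on_behalf_of": on_behalf_of,  # delegating user, if any
        "action": action,
    }
    return json.dumps(record, sort_keys=True)


print(audit_event("support-bot-01", "agent", "crm.case.update",
                  on_behalf_of="alice@example.com"))
```

With both fields present, an investigator can tell whether Alice updated the case herself or an agent did so under her delegation.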

Scenario 2: When an AI Agent Acts Like a Non-Human

Strictly non-human identities distinctly separate AI agents from human users, acknowledging their unique lifecycle and responsibilities. Take, for instance, an autonomous fraud detection AI operating within financial services. Such an AI agent continuously interacts with transactional systems.

Given the highly sensitive nature of its tasks, the fact that it operates outside the bounds of any single developer, and its autonomous decision-making capabilities, it clearly requires a non-human identity distinct from the humans who develop or oversee it.

Non-human identities reflect the operational requirements and lifecycle management of AI agents in the classic sense of a workload – a piece of software that humans have built to automate a task. Unlike human identities, workloads (of which AI agents may be considered one type) may frequently require rapid provisioning, rotation, or revocation, driven by algorithm updates, changing contexts or deployment to new environments.

These identities interface with services not through a UI, but through secure API calls or message queues (e.g., Kafka or RabbitMQ), using credentials tailored specifically for machine-to-machine communication, such as OAuth 2.0 client credentials, JSON Web Tokens, or mutual TLS (mTLS).
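As an illustration of the first of these, here is a minimal sketch of an OAuth 2.0 client credentials token request (RFC 6749, section 4.4) built with the Python standard library. The token URL, client ID, secret, and scope are placeholders, and the request is only constructed, not sent. For simplicity the sketch skips the form-encoding of the client ID and secret that the RFC calls for before Basic encoding.

```python
import base64
import urllib.parse
import urllib.request


def build_token_request(token_url: str, client_id: str,
                        client_secret: str, scope: str) -> urllib.request.Request:
    """Build (but do not send) a client credentials token request."""
    body = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "scope": scope,
    }).encode("ascii")
    # The client authenticates with HTTP Basic, as the workload itself,
    # with no human user involved.
    basic = base64.b64encode(f"{client_id}:{client_secret}".encode()).decode("ascii")
    return urllib.request.Request(
        token_url,
        data=body,
        headers={
            "Authorization": f"Basic {basic}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
        method="POST",
    )


# Placeholder values; a real deployment would fetch the secret from a
# vault or replace it with a workload-attested credential.
req = build_token_request("https://idp.example.com/oauth2/token",
                          "fraud-detector", "not-a-real-secret",
                          "transactions:read")
print(req.get_method(), req.full_url)
```

Note that the request carries a static secret, which is exactly the kind of credential that ends up in a secrets database if nothing manages its lifecycle.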

From a security perspective, non-human identities minimize risk by limiting permissions exclusively to necessary operations, precisely the type of granularity required in modern Zero Trust architectures. They simplify auditability and compliance since their access and actions are sharply defined, explicitly logged, and clearly differentiated from human users.

However, implementing this robust security approach introduces complexity. If you’re like most organizations, you’re not managing access for workloads – you’re managing secrets. You don’t have an IAM system for workloads – you have a secrets database.

Non-human identity access management requires careful management of fine-grained permissions and role assignments, which demands focus and IAM tooling. This underscores the need for Workload IAM systems that, much like human IAM systems, automate credential lifecycle management and integrate tightly with AI operational patterns.

Scenario 3: When an AI Agent Is Both Human and Non-Human

If we’re honest, neither of the above scenarios accurately captures what we are building in AI agents today. AI agents already don’t fit neatly into the human or the non-human world. They can move between the two, using delegated human permissions for certain data and non-human access for other data. So, is there another form of identity that better suits this purpose?

For the purpose of this discussion, let’s call them agentic identities.

Agentic identities represent a nuanced combination of human and non-human identities within a single AI agent. Rather than merely falling between human and non-human, agentic identities combine both, allowing an agent to act with user-delegated authority in one moment and autonomous privileges in the next.

For example, a health care AI agent may assist in managing patient data by accessing electronic health records (EHRs) through delegated permissions granted by healthcare providers. This ensures that the agent acts within the scope of the provider’s authority.

Additionally, these agents use non-human credentials to interact with hospital information systems, scheduling tools, and billing systems, facilitating tasks like appointment scheduling and insurance claim processing autonomously.

The fundamental challenge of agentic identity lies in accurately determining when an AI agent is performing human-like tasks versus purely automated tasks. Addressing this requires advanced contextual reasoning capabilities within the identity management system, which can dynamically differentiate and assign appropriate identities based on operational context. Rather than relying solely on traditional identity federation or conditional access, agentic identities may themselves need an AI-driven decision engine to determine their needs not for “all time” but “for this action” – a true least-privilege approach.

An IAM platform designed for agentic AI would need to continuously evaluate context, roles, behaviors, and permissions to determine the appropriate identity type – human or non-human – and the appropriate scope to employ for any given action.

While offering operational flexibility, this sophisticated approach would substantially increase IAM complexity, requiring significant expertise and ongoing management.
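To make the “for this action” idea concrete, here is a deliberately simple sketch using the health care example above. It assumes a static rule in place of the AI-driven decision engine the text imagines, and every name in it (`Action`, `Grant`, `DELEGATED_ONLY`, `resolve_identity`) is hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Action:
    name: str
    resource: str
    requested_by: Optional[str] = None  # user who asked for this action, if any


@dataclass(frozen=True)
class Grant:
    identity_type: str  # "delegated" or "non-human"
    subject: str        # whose authority the action runs under
    scope: str          # limited to this one action


# Assumed policy: these resources may only be touched under a user's authority.
DELEGATED_ONLY = {"ehr"}


def resolve_identity(agent_id: str, action: Action) -> Grant:
    """Pick an identity type and a single-action scope for one request."""
    scope = f"{action.resource}:{action.name}"
    if action.resource in DELEGATED_ONLY:
        if action.requested_by is None:
            raise PermissionError(f"{scope} requires a delegating user")
        return Grant("delegated", action.requested_by, scope)
    return Grant("non-human", agent_id, scope)


# Reading a chart runs under the provider's authority...
print(resolve_identity("agent-7", Action("read", "ehr", requested_by="dr.lee")))
# ...while submitting a claim runs under the agent's own workload identity.
print(resolve_identity("agent-7", Action("submit", "billing")))
```

The same agent receives a different identity type and a different scope on each call, which is the behavior a static role assignment cannot express.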

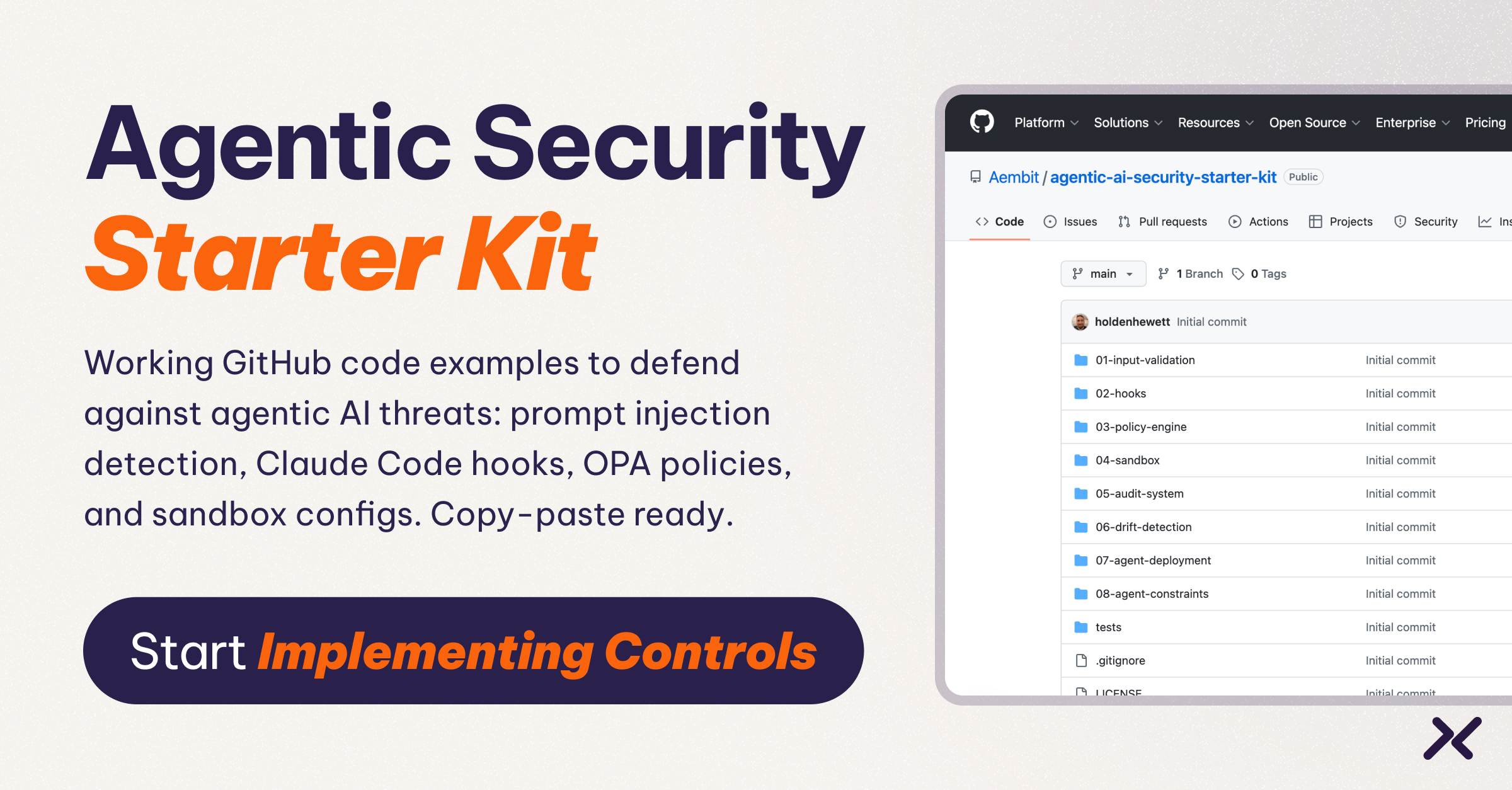

What You Can Do Today to Secure AI Identity

One day, there will be IAM for AI agents, just as there is for humans and non-humans.

We’re not there today, and our understanding of managing agents via identity is just beginning to coalesce. As we work toward this goal, there are some approaches you can take today to secure your AI agents as you test and release them:

- Map each agent’s access needs to its function, and classify agents based on their operational scope. Choose a non-human identity when the agent operates autonomously across many sessions or tasks, and a human identity when the agent is acting specifically on behalf of a given individual.

- Consider using scoped credentials for agents that switch between user-delegated and autonomous modes.

- Carefully consider how you will log this set of behaviors, as they may cross multiple IAM systems.
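The first two recommendations can start as a simple inventory exercise. The sketch below assumes you can answer two yes/no questions per agent; the `AgentProfile` fields and the category labels are illustrative, not an established taxonomy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentProfile:
    name: str
    acts_for_individual: bool  # works on behalf of a specific person
    runs_autonomously: bool    # operates across many sessions or tasks on its own


def classify(profile: AgentProfile) -> str:
    """Suggest an identity type for an agent based on how it operates."""
    if profile.acts_for_individual and profile.runs_autonomously:
        return "mixed"  # candidate for scoped credentials per mode
    if profile.acts_for_individual:
        return "human-delegated"
    if profile.runs_autonomously:
        return "non-human"
    return "unclassified"  # needs a closer look before release


inventory = [
    AgentProfile("support-chatbot", acts_for_individual=True, runs_autonomously=False),
    AgentProfile("fraud-detector", acts_for_individual=False, runs_autonomously=True),
    AgentProfile("care-coordinator", acts_for_individual=True, runs_autonomously=True),
]
for agent in inventory:
    print(f"{agent.name}: {classify(agent)}")
```

Agents that land in the mixed category are the ones whose activity is most likely to span multiple IAM systems, so they are a good place to start when planning logging.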

Looking Ahead: IAM for Agentic AI

Identity for AI is trailing just behind the capabilities we’re building into agentic systems.

Not only will we need it to authenticate agents to sensitive data and resources, we will require it to secure our most critical systems.

Today we must make do with binary IAM systems for humans and non-humans, but the new operating model of AI agents may soon drive a new form of access management.
